DML: Build & Serve a Production-Ready Classifier in 1 Hour Using LLMs

Stop Manually Creating Your ML AWS Infrastructure. Use Terraform! Build & Serve a Production-Ready Classifier in 1 Hour Using LLMs.

Hello there, I am Paul Iusztin 👋🏼

Within this newsletter, I will help you decode complex topics about ML & MLOps one week at a time 🔥

This week’s ML & MLOps topics:

Stop Manually Creating Your ML AWS Infrastructure. Use Terraform!

Build & Serve a Production-Ready Classifier in 1 Hour Using LLMs.

Before going into our subject of the day, I have some news to share with you 👀

Do you want to 𝗾𝘂𝗶𝗰𝗸𝗹𝘆 𝗹𝗲𝗮𝗿𝗻 how to 𝗯𝘂𝗶𝗹𝗱 𝗲𝗻𝗱-𝘁𝗼-𝗲𝗻𝗱 𝗠𝗟 𝘀𝘆𝘀𝘁𝗲𝗺𝘀 𝘂𝘀𝗶𝗻𝗴 𝗟𝗟𝗠𝘀 in a 𝘀𝘁𝗿𝘂𝗰𝘁𝘂𝗿𝗲𝗱 𝘄𝗮𝘆, with an emphasis on 𝗿𝗲𝗮𝗹-𝘄𝗼𝗿𝗹𝗱 𝗲𝘅𝗮𝗺𝗽𝗹𝗲𝘀?

I want to let you know that ↓

I have been invited to a 𝘄𝗲𝗯𝗶𝗻𝗮𝗿 on 𝗦𝗲𝗽𝘁𝗲𝗺𝗯𝗲𝗿 𝟮𝟴𝘁𝗵 to present an overview of the 𝗛𝗮𝗻𝗱𝘀-𝗼𝗻 𝗟𝗟𝗠𝘀 course I am creating.

I will show you a 𝗵𝗮𝗻𝗱𝘀-𝗼𝗻 𝗲𝘅𝗮𝗺𝗽𝗹𝗲 of how to 𝗯𝘂𝗶𝗹𝗱 𝗮 𝗳𝗶𝗻𝗮𝗻𝗰𝗶𝗮𝗹 𝗯𝗼𝘁 𝘂𝘀𝗶𝗻𝗴 𝗟𝗟𝗠𝘀. Here is what I will cover ↓

- creating your Q&A dataset in a semi-automated way (OpenAI GPT)

- fine-tuning an LLM on your new dataset using QLoRA (Hugging Face, PEFT, Comet ML, Beam)

- building a streaming pipeline that ingests news in real time into a vector DB (Bytewax, Qdrant, AWS)

- building a financial bot based on the fine-tuned model and real-time financial news (LangChain, Comet ML, Beam)

- building a simple UI to interact with the financial bot

❗No Notebooks or fragmented examples.

✅ I want to show you how to build a real product.

→ More precisely, I will focus on the engineering and system design, showing you how the components described above work together.


If this is something you want to learn, be sure to register using the link below ↓

↳🔗 Engineering an End-to-End ML System for a Financial Assistant Using LLMs (September 28th).

See you there 👀

Now back to business 🔥

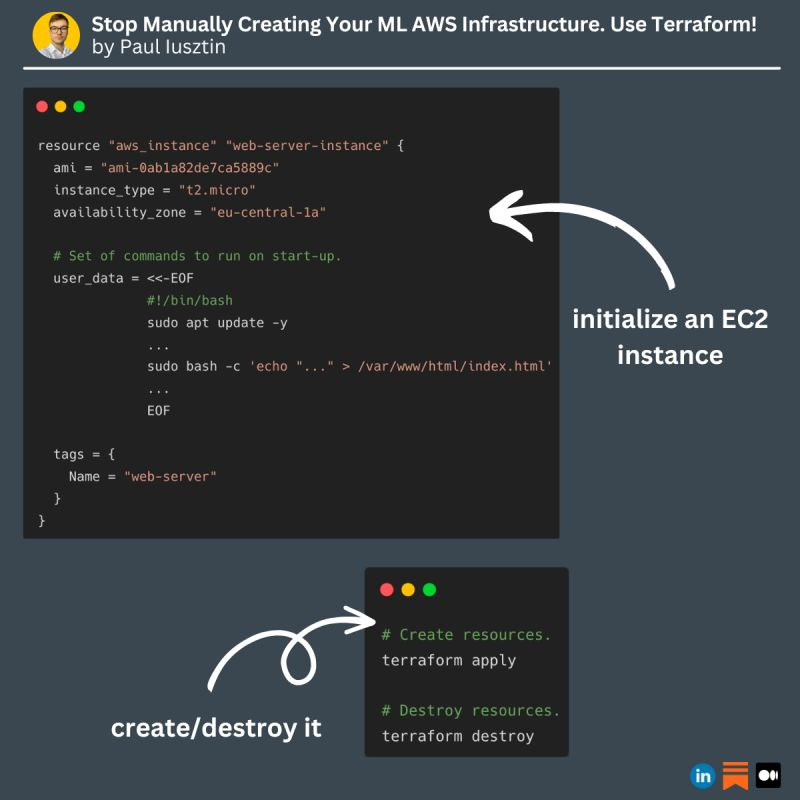

#1. Stop Manually Creating Your ML AWS Infrastructure. Use Terraform!

I was needlessly spending $1,000 every month on cloud machines until I started using this tool 👇

Terraform!


𝐅𝐢𝐫𝐬𝐭, 𝐥𝐞𝐭'𝐬 𝐮𝐧𝐝𝐞𝐫𝐬𝐭𝐚𝐧𝐝 𝐰𝐡𝐲 𝐰𝐞 𝐧𝐞𝐞𝐝 𝐓𝐞𝐫𝐫𝐚𝐟𝐨𝐫𝐦.

When you want to deploy a software application, there are two main steps:

1. Provisioning infrastructure

2. Deploying applications

In a typical workflow, before deploying your applications or building your CI/CD pipelines, you manually spin up your machines on, let's say, AWS.

This workflow is fine at first, but it becomes problematic in two scenarios:

#1. Your infrastructure grows large and complex, so replicating it manually becomes cumbersome and error-prone.

#2. In the world of AI, you often spin up a GPU machine to train your models and no longer need it afterward. If you forget to shut it down, you end up paying a lot of $$$ for nothing.

With Terraform, you can solve both of these issues.
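For scenario #2, one way to make sure a training machine never outlives the job is to wrap the Terraform CLI so that `terraform destroy` always runs after training, even when training crashes. The sketch below assumes the Terraform CLI is installed and that a hypothetical `infra/gpu` folder already holds the `.tf` files describing the GPU machine; the function names are illustrative, not from any library. The command runner is injectable so you can dry-run the flow without touching AWS.

```python
import subprocess
from typing import Callable, Sequence

# A runner executes one command; injecting it lets you dry-run without AWS.
Runner = Callable[[Sequence[str]], None]


def run_command(cmd: Sequence[str]) -> None:
    """Run a command, raising CalledProcessError if it exits non-zero."""
    subprocess.run(list(cmd), check=True)


def train_on_ephemeral_gpu(
    train: Callable[[], None],
    workdir: str = "infra/gpu",  # hypothetical folder holding the GPU machine's .tf files
    run: Runner = run_command,
) -> None:
    """Provision the GPU machine, train on it, then always destroy it."""
    tf = ["terraform", f"-chdir={workdir}"]
    run(tf + ["init", "-input=false"])
    try:
        run(tf + ["apply", "-auto-approve", "-input=false"])
        train()
    finally:
        # Runs even if apply or training fails, so no GPU is left running (and billing).
        run(tf + ["destroy", "-auto-approve", "-input=false"])
```

The `try`/`finally` is the key design choice: the teardown no longer depends on you remembering it.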


So...

𝐖𝐡𝐚𝐭 𝐢𝐬 𝐓𝐞𝐫𝐫𝐚𝐟𝐨𝐫𝐦?

It sits on the infrastructure provisioning layer as an "infrastructure as code" (IaC) tool that:

- is declarative (you focus on the WHAT, not on the HOW)

- automates and manages your infrastructure

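- is source-available (it was open source under the MPL 2.0 until August 2023, when HashiCorp moved it to the Business Source License)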
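To make "declarative" concrete: you only describe the desired state, and the tool diffs it against the current state to work out which actions to take. The toy planner below is a simplified model of that idea, not Terraform's actual algorithm (real Terraform also tracks dependencies and distinguishes in-place updates from replacements); the resource names and attributes are made up for illustration.

```python
from typing import Dict, List, Tuple

# Resource name -> its attributes (e.g. {"instance_type": "g4dn.xlarge"}).
Resources = Dict[str, Dict[str, str]]


def plan(desired: Resources, current: Resources) -> List[Tuple[str, str]]:
    """Diff the desired state (the WHAT) against the current state.

    Returns (action, resource_name) pairs: the tool, not you, derives the HOW.
    """
    actions: List[Tuple[str, str]] = []
    for name in sorted(desired):
        if name not in current:
            actions.append(("create", name))
        elif desired[name] != current[name]:
            actions.append(("update", name))
    for name in sorted(current):
        if name not in desired:
            actions.append(("destroy", name))
    return actions


desired = {"vpc": {"cidr": "10.0.0.0/16"}, "gpu_vm": {"instance_type": "g4dn.xlarge"}}
current = {"vpc": {"cidr": "10.0.0.0/16"}, "old_vm": {"instance_type": "t3.micro"}}
print(plan(desired, current))  # [('create', 'gpu_vm'), ('destroy', 'old_vm')]
```

Removing a resource from the desired state is all it takes to get it destroyed, which is exactly what stops forgotten machines from lingering.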

Yeah... yeah... that sounds fancy. But 𝐰𝐡𝐚𝐭 𝐜𝐚𝐧 𝐈 𝐝𝐨 𝐰𝐢𝐭𝐡 𝐢𝐭?

Let's take AWS as an example, where you have to:

- create a VPC

- create AWS users and permissions

- spin up EC2 machines

- install programs (e.g., Docker)

- create a K8s cluster

Using Terraform...

You can do all that just by writing a configuration file that describes the desired state of your infrastructure.

Basically, it helps you create all the infrastructure you need programmatically. Isn't that awesome?
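Terraform configs are usually written in HCL, but Terraform also accepts the same configuration in JSON syntax (`*.tf.json` files), which makes it easy to generate from Python. The sketch below emits a VPC, a subnet, and an EC2 instance that installs Docker on boot, covering part of the AWS list above. The region, AMI ID, CIDR blocks, resource names, and the Ubuntu-based `user_data` script are all placeholder assumptions; users, permissions, and a K8s cluster are left out for brevity.

```python
import json
from pathlib import Path


def build_config(region: str, ami_id: str, instance_type: str) -> dict:
    """Return a Terraform configuration in its JSON syntax (main.tf.json)."""
    return {
        "terraform": {"required_providers": {"aws": {"source": "hashicorp/aws"}}},
        "provider": {"aws": {"region": region}},
        "resource": {
            "aws_vpc": {"ml": {"cidr_block": "10.0.0.0/16"}},
            "aws_subnet": {
                "ml": {
                    # "${...}" references another resource, as in HCL.
                    "vpc_id": "${aws_vpc.ml.id}",
                    "cidr_block": "10.0.1.0/24",
                }
            },
            "aws_instance": {
                "trainer": {
                    "ami": ami_id,
                    "instance_type": instance_type,
                    "subnet_id": "${aws_subnet.ml.id}",
                    # Installs Docker on first boot (assumes an Ubuntu AMI).
                    "user_data": "#!/bin/bash\napt-get update && apt-get install -y docker.io\n",
                }
            },
        },
    }


def write_config(config: dict, workdir: str) -> Path:
    """Write the config where `terraform init` and `terraform apply` can find it."""
    path = Path(workdir) / "main.tf.json"
    path.parent.mkdir(parents=True, exist_ok=True)
    path.write_text(json.dumps(config, indent=2))
    return path
```

After writing the file, running `terraform init` and `terraform apply` in that folder provisions the resources, and `terraform destroy` removes them again.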

If you want to quickly understand Terraform enough to start using it in your own projects:

↳ check out my 7-minute read article: 🔗 Stop Manually Creating Your AWS Infrastructure. Use Terraform!

#2. Build & Serve a Production-Ready Classifier in 1 Hour Using LLMs

𝘓𝘓𝘔𝘴 𝘢𝘳𝘦 𝘢 𝘭𝘰𝘵 𝘮𝘰𝘳𝘦 𝘵𝘩𝘢𝘯 𝘤𝘩𝘢𝘵𝘣𝘰𝘵𝘴. 𝘛𝘩𝘦𝘴𝘦 𝘮𝘰𝘥𝘦𝘭𝘴 𝘢𝘳𝘦 𝘳𝘦𝘷𝘰𝘭𝘶𝘵𝘪𝘰𝘯𝘪𝘻𝘪𝘯𝘨 𝘩𝘰𝘸 𝘔𝘓 𝘴𝘺𝘴𝘵𝘦𝘮𝘴 𝘢𝘳𝘦 𝘣𝘶𝘪𝘭𝘵.


Using the standard approach when building an end-to-end ML application, you had to:

- get labeled data: 1 month

- train the model: 2 months

- serve the model: 3 months

These 3 steps might take ~6 months to implement.

So far, this approach has worked well.

But here is the catch ↓


𝘠𝘰𝘶 𝘤𝘢𝘯 𝘳𝘦𝘢𝘤𝘩 𝘢𝘭𝘮𝘰𝘴𝘵 𝘵𝘩𝘦 𝘴𝘢𝘮𝘦 𝘳𝘦𝘴𝘶𝘭𝘵 𝘪𝘯 𝘢 𝘧𝘦𝘸 𝘩𝘰𝘶𝘳𝘴 𝘰𝘳 𝘥𝘢𝘺𝘴 𝘶𝘴𝘪𝘯𝘨 𝘢 𝘱𝘳𝘰𝘮𝘱𝘵-𝘣𝘢𝘴𝘦𝘥 𝘭𝘦𝘢𝘳𝘯𝘪𝘯𝘨 𝘢𝘱𝘱𝘳𝘰𝘢𝘤𝘩.

Let's take a classification task as an example ↓

𝗦𝘁𝗲𝗽 𝟭: You write a system prompt that explains the task to the model and the types of inputs and outputs it will get.

"

You will be provided with customer service queries.

Classify each query into the following categories:

- Billing

- Account Management

- General Inquiry

"

𝗦𝘁𝗲𝗽 𝟮: You can give the model an example to make sure it understands the task (known as one-shot learning):

"

User: I want to know the price of the pro subscription plan.

Assistant: Billing

"

𝗦𝘁𝗲𝗽 𝟯: Attach the user prompt to build the final input prompt, which now consists of the following prompts:

- system

- example

- user
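Steps 1 to 3 can be sketched as a list of chat messages. This assumes a chat-style API that takes `role`/`content` messages (the format OpenAI's Chat Completions API uses); the constant and function names are illustrative. The one-shot example is encoded as a user/assistant exchange so the model sees exactly what a correct answer looks like.

```python
from typing import Dict, List

CATEGORIES = ["Billing", "Account Management", "General Inquiry"]

# Step 1: the system prompt describes the task, the inputs, and the allowed outputs.
SYSTEM_PROMPT = (
    "You will be provided with customer service queries.\n"
    "Classify each query into the following categories:\n"
    + "\n".join(f"- {category}" for category in CATEGORIES)
)

# Step 2: one labeled example (one-shot learning) as a user/assistant exchange.
EXAMPLE = [
    {"role": "user", "content": "I want to know the price of the pro subscription plan."},
    {"role": "assistant", "content": "Billing"},
]


def build_messages(user_query: str) -> List[Dict[str, str]]:
    """Step 3: system prompt + example + the new user query, in that order."""
    return [
        {"role": "system", "content": SYSTEM_PROMPT},
        *EXAMPLE,
        {"role": "user", "content": user_query},
    ]
```

Generating the category list from `CATEGORIES` keeps the prompt and any code that validates the answers in sync.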

𝗦𝘁𝗲𝗽 𝟰: Call the LLM's API... and boom, you built a classifier in under one hour.
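Step 4 could look like the sketch below, assuming OpenAI's official Python SDK (v1 interface) with an `OPENAI_API_KEY` in the environment; the model name is an assumption, so swap in whichever one you use. Because LLMs return free text, the answer is mapped back onto the allowed categories, and anything off-list returns `None` so you can log it instead of silently trusting it. The completion function is injectable, so the parsing logic can be tested without calling the API.

```python
from typing import Callable, Dict, List, Optional

Messages = List[Dict[str, str]]
CATEGORIES = ["Billing", "Account Management", "General Inquiry"]


def openai_complete(messages: Messages, model: str = "gpt-3.5-turbo") -> str:
    """Send the messages to OpenAI's Chat Completions API and return the reply text."""
    from openai import OpenAI  # imported lazily so the rest works without the SDK

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model, messages=messages, temperature=0  # 0 for more consistent labels
    )
    return response.choices[0].message.content or ""


def parse_label(raw: str) -> Optional[str]:
    """Map the model's free-text answer onto one allowed category, else None."""
    answer = raw.strip().strip(".").lower()
    for category in CATEGORIES:
        if answer == category.lower():
            return category
    return None


def classify(
    messages: Messages, complete: Callable[[Messages], str] = openai_complete
) -> Optional[str]:
    """Call the LLM on the assembled prompt and return a validated label."""
    return parse_label(complete(messages))
```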

Cool, right? 🔥

With this approach, the only time-consuming step is tweaking the prompt until it produces the desired results.
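Prompt tweaking goes faster when each prompt version is scored against the same small, hand-labeled set of queries. The helper below is a minimal sketch of that loop (its name and the idea of a labeled set are assumptions, not part of the original workflow): it calls the classifier once per query and returns the accuracy plus the misclassified cases to inspect.

```python
from typing import Callable, List, Optional, Tuple

Classifier = Callable[[str], Optional[str]]
Error = Tuple[str, str, Optional[str]]  # (query, expected, predicted)


def evaluate(classify: Classifier, labeled: List[Tuple[str, str]]) -> Tuple[float, List[Error]]:
    """Score a classifier on (query, expected_label) pairs."""
    if not labeled:
        raise ValueError("need at least one labeled example")
    errors: List[Error] = []
    for query, expected in labeled:
        predicted = classify(query)  # one call per query, since LLM calls cost money
        if predicted != expected:
            errors.append((query, expected, predicted))
    return 1 - len(errors) / len(labeled), errors
```

Re-running `evaluate` after every prompt change shows whether a tweak actually helped, and the error list points at which categories the prompt still confuses.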

To conclude...

In today's world of LLMs, to build a classifier, you only have to:

- write a system prompt

- write an example

- attach the user prompt

- pass the input prompt to the LLM's API

That’s it for today 👾

See you next Thursday at 9:00 a.m. CET.

Have a fantastic weekend!

Paul

Whenever you’re ready, here is how I can help you:

The Full Stack 7-Steps MLOps Framework: a 7-lesson FREE course that will walk you step-by-step through how to design, implement, train, deploy, and monitor an ML batch system using MLOps good practices. It contains the source code + 2.5 hours of reading & video materials on Medium.

Machine Learning & MLOps Blog: in-depth topics about designing and productionizing ML systems using MLOps.

Machine Learning & MLOps Hub: a place where all my work is aggregated in one place (courses, articles, webinars, podcasts, etc.).