DML: Chain of Thought Reasoning: Write robust & explainable prompts for your LLM

Everything you need to know about chaining prompts: increase your LLM's accuracy & debug and explain your LLM.

Hello there, I am Paul Iusztin 👋🏼

Within this newsletter, I will help you decode complex topics about ML & MLOps one week at a time 🔥

This week’s ML & MLOps topics:

Chaining Prompts to Reduce Costs, Increase Accuracy & Easily Debug Your LLMs

Chain of Thought Reasoning: Write robust & explainable prompts for your LLM

Extra: Why any ML system should use an ML platform as its central nervous system

But first, I want to share with you this quick 7-minute guide that teaches you how diffusion models such as Stable Diffusion are trained and how they generate new images.

Diffusion models are the cornerstone of most modern computer vision generative AI applications.

Thus, if you are into generative AI, it is essential to have an intuition for how a diffusion model works.

Check out my article to quickly understand:

- the general picture of how diffusion models work

- how diffusion models generate new images

- how they are trained

- how they are controlled by a given context (e.g., text)

↳🔗 Busy? This Is Your Quick Guide to Opening the Diffusion Models Black Box

#1. Chaining Prompts to Reduce Costs, Increase Accuracy & Easily Debug Your LLMs

Here it is ↓

𝗖𝗵𝗮𝗶𝗻𝗶𝗻𝗴 𝗽𝗿𝗼𝗺𝗽𝘁𝘀 is an intuitive technique: instead of writing one large prompt, you split the task into multiple, simpler LLM calls.

𝗪𝗵𝘆? 𝗟𝗲𝘁'𝘀 𝘂𝗻𝗱𝗲𝗿𝘀𝘁𝗮𝗻𝗱 𝘁𝗵𝗶𝘀 𝘄𝗶𝘁𝗵 𝘀𝗼𝗺𝗲 𝗮𝗻𝗮𝗹𝗼𝗴𝗶𝗲𝘀.

When cooking, you follow a recipe split into multiple steps. You move to the next step only when you know that what you have done so far is correct.

↳ You want every prompt to be simple & focused.

Another analogy is the difference between reading all the code in one monolithic "god" class and reading code whose logic is split across multiple modules, each with a single responsibility.

↳ You want to understand & debug every prompt easily.

.

Chaining prompts is a 𝗽𝗼𝘄𝗲𝗿𝗳𝘂𝗹 𝘁𝗼𝗼𝗹 𝗳𝗼𝗿 𝗯𝘂𝗶𝗹𝗱𝗶𝗻𝗴 𝗮 𝘀𝘁𝗮𝘁𝗲𝗳𝘂𝗹 𝘀𝘆𝘀𝘁𝗲𝗺 where you must take different actions depending on the current state.

In other words, you control what happens between 2 chained prompts.

𝘉𝘺𝘱𝘳𝘰𝘥𝘶𝘤𝘵𝘴 𝘰𝘧 𝘤𝘩𝘢𝘪𝘯𝘪𝘯𝘨 𝘱𝘳𝘰𝘮𝘱𝘵𝘴:

- increased accuracy

- fewer tokens -> lower costs (you can skip the steps of the workflow that are not needed)

- fewer context-window limitations (each call carries only the context it needs)

- easier to include a human in the loop -> easier to control, moderate, test & debug

- easier to use external tools/plugins (web search, APIs, databases, calculators, etc.)

.

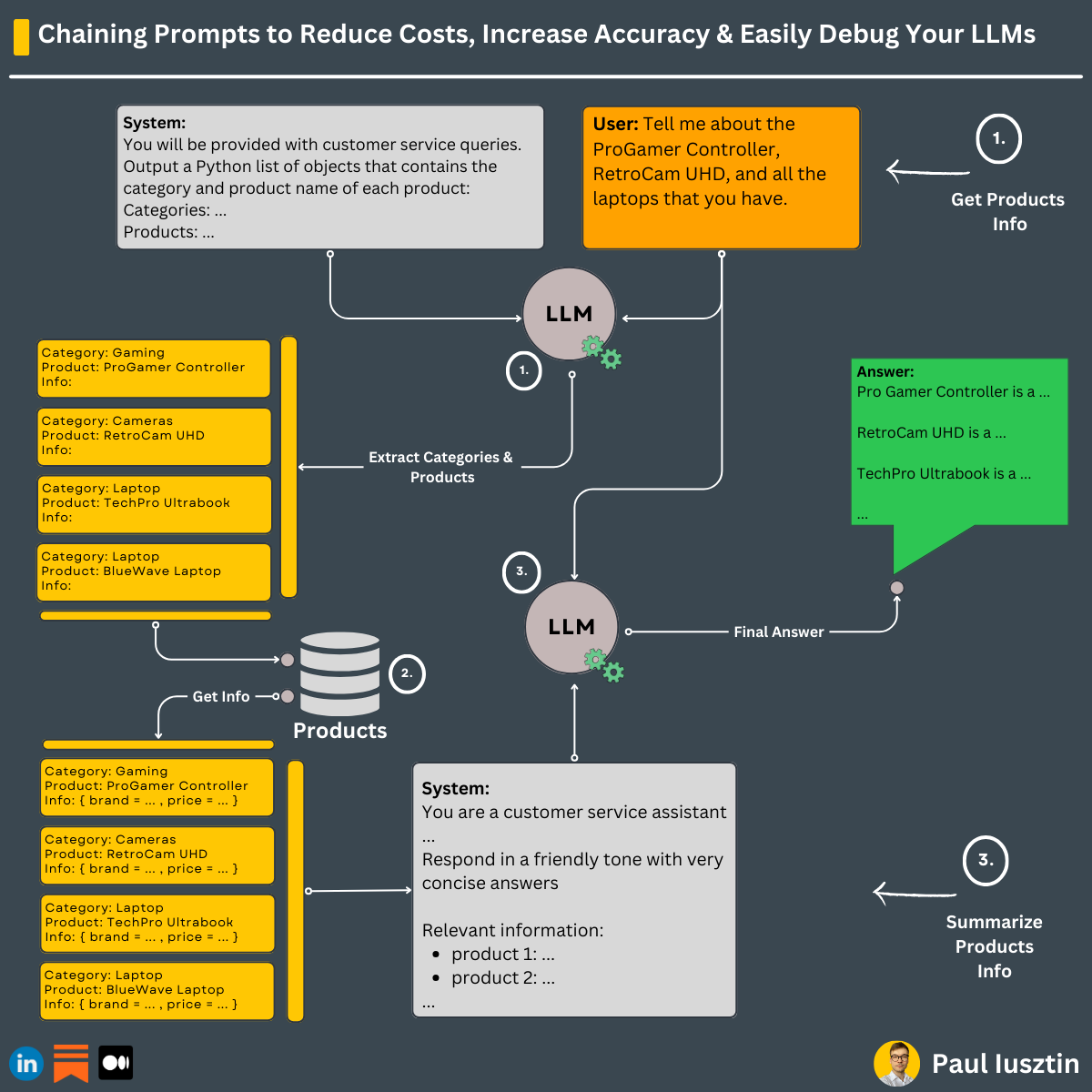

𝗘𝘅𝗮𝗺𝗽𝗹𝗲

You want to build a virtual assistant to respond to customer service queries.

Instead of putting the system message, all the available products, and the user inquiry into a single prompt, you can split the workflow into the following steps:

1. Use a prompt to extract the products and categories of interest.

2. Enrich the context only with the products of interest.

3. Call the LLM for the final answer.
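The three steps above can be sketched in Python as follows. This is a minimal sketch, not a reference implementation: `call_llm` is a hypothetical placeholder for whatever client you use (any function that takes a prompt string and returns the model's text), and the catalog, product names, and the JSON output format of step 1 are illustrative assumptions. A fake LLM is plugged in at the end so the chain runs offline.

```python
import json
from typing import Callable

# Hypothetical interface: any function that sends a prompt to an LLM and returns its text reply.
LLMFn = Callable[[str], str]


def extract_categories(call_llm: LLMFn, inquiry: str, categories: list[str]) -> list[str]:
    """Step 1: a small, focused prompt that only extracts the categories of interest."""
    prompt = (
        "Return a JSON list with the product categories mentioned in the inquiry.\n"
        f"Allowed categories: {json.dumps(categories)}\n"
        f"Inquiry: {inquiry}"
    )
    found = json.loads(call_llm(prompt))
    # Drop anything that is not a known category, so hallucinations do not reach step 2.
    return [c for c in found if c in categories]


def build_context(catalog: dict[str, list[str]], categories: list[str]) -> str:
    """Step 2: plain Python, no LLM call. Keep only the products of interest."""
    return "\n".join(f"- {c}: {p}" for c in categories for p in catalog[c])


def answer_inquiry(call_llm: LLMFn, inquiry: str, catalog: dict[str, list[str]]) -> str:
    categories = extract_categories(call_llm, inquiry, list(catalog))
    # The program, not the LLM, decides what goes into the final prompt.
    context = build_context(catalog, categories) if categories else "No specific product mentioned."
    final_prompt = (
        "You are a helpful customer service assistant.\n"
        f"Relevant products:\n{context}\n"
        f"Customer inquiry: {inquiry}"
    )
    return call_llm(final_prompt)  # Step 3: final answer


# Usage with a fake LLM (hypothetical catalog) so the chain can run without an API key.
catalog = {"laptops": ["UltraBook 13", "ProBook 15"], "tvs": ["OLED 55"]}
prompts_seen: list[str] = []


def fake_llm(prompt: str) -> str:
    prompts_seen.append(prompt)
    if prompt.startswith("Return a JSON list"):
        return '["laptops", "spaceships"]'  # includes an unknown category on purpose
    return "Sure! Here is what I know about our laptops."


reply = answer_inquiry(fake_llm, "Which laptop is lighter?", catalog)
```

Because step 2 is ordinary code, you can log, test, or change it without touching either prompt, and the final call only pays for the tokens of the relevant products.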

You can evolve this example by adding another prompt that classifies the nature of the user inquiry. Based on that classification, you route the inquiry to billing, technical support, account management, or a general-purpose LLM (reportedly similar to how more complex systems such as GPT-4 are structured).
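A possible sketch of that routing step, under the same assumptions: a hypothetical `call_llm` function, and made-up handler functions standing in for the billing, technical support, account management, and general-purpose flows.

```python
from typing import Callable

# Hypothetical interface: prompt in, model text out.
LLMFn = Callable[[str], str]
ROUTES = ("billing", "technical_support", "account_management", "general")


def classify_inquiry(call_llm: LLMFn, inquiry: str) -> str:
    prompt = (
        f"Classify the customer inquiry into exactly one of: {', '.join(ROUTES)}.\n"
        "Answer with the label only.\n"
        f"Inquiry: {inquiry}"
    )
    label = call_llm(prompt).strip().lower()
    # Unknown labels fall back to the general-purpose assistant instead of crashing.
    return label if label in ROUTES else "general"


def route_inquiry(call_llm: LLMFn, inquiry: str, handlers: dict[str, Callable[[str], str]]) -> str:
    # The classification prompt only picks a route; the chosen handler does the actual work.
    return handlers[classify_inquiry(call_llm, inquiry)](inquiry)


# Usage with stub handlers and stub classifiers (illustrative only).
handlers = {
    "billing": lambda q: "billing flow: " + q,
    "technical_support": lambda q: "support flow: " + q,
    "account_management": lambda q: "account flow: " + q,
    "general": lambda q: "general LLM: " + q,
}
billing_reply = route_inquiry(lambda p: " Billing\n", "I was charged twice.", handlers)
fallback_reply = route_inquiry(lambda p: "refunds", "Where is my order?", handlers)
```

Normalizing and validating the label in code keeps a slightly off-format answer from the classifier from breaking the whole chain.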

𝗧𝗼 𝘀𝘂𝗺𝗺𝗮𝗿𝗶𝘇𝗲:

Instead of writing a giant prompt that includes multiple steps:

Split the god prompt into multiple modular prompts that let you keep track of the state externally and orchestrate the program.

In other words, you want modular prompts that you can combine easily, as when writing standard functions/classes.
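To make "keep track of the state externally" concrete, here is a minimal, framework-free sketch. It is not LangChain's API: `ChainState`, `run_chain`, and the three steps are hypothetical names. Each step stands in for one single-instruction prompt; here the steps are plain stub functions so the example runs offline. Returning `None` stops the chain, which is how unneeded steps get skipped.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ChainState:
    """State lives outside the prompts, so the program decides what runs next."""
    data: dict = field(default_factory=dict)
    trace: list = field(default_factory=list)  # (step name, output) pairs, handy for debugging


# A step reads the state and returns new values, or None to stop the chain early.
Step = Callable[[ChainState], Optional[dict]]


def run_chain(steps: list[tuple[str, Step]], initial: dict) -> ChainState:
    state = ChainState(data=dict(initial))
    for name, step in steps:
        output = step(state)
        state.trace.append((name, output))
        if output is None:
            break  # the remaining steps (and their tokens) are skipped
        state.data.update(output)
    return state


# Stub steps; in practice each would wrap one focused LLM call.
def check_on_topic(state: ChainState) -> Optional[dict]:
    return None if "weather" in state.data["inquiry"] else {"on_topic": True}


def extract_product(state: ChainState) -> Optional[dict]:
    return {"product": "ProBook 15"}


def draft_answer(state: ChainState) -> Optional[dict]:
    return {"answer": f"Here are the specs of the {state.data['product']}."}


steps = [("check_on_topic", check_on_topic), ("extract_product", extract_product), ("draft_answer", draft_answer)]
off_topic = run_chain(steps, {"inquiry": "What is the weather today?"})
on_topic = run_chain(steps, {"inquiry": "Does the ProBook 15 have USB-C?"})
```

Inspecting `state.trace` shows exactly which step produced which output, so a wrong final answer can be traced back to the single prompt that caused it.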

.

To 𝗮𝘃𝗼𝗶𝗱 𝗼𝘃𝗲𝗿𝗲𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴, use this technique only when your prompt contains two or more instructions.

You can leverage the single responsibility principle from software -> one prompt = one instruction.

↳🔗 Tools to chain prompts: LangChain

↳🔗 Tools to monitor and debug prompts: Comet LLMOps Tools

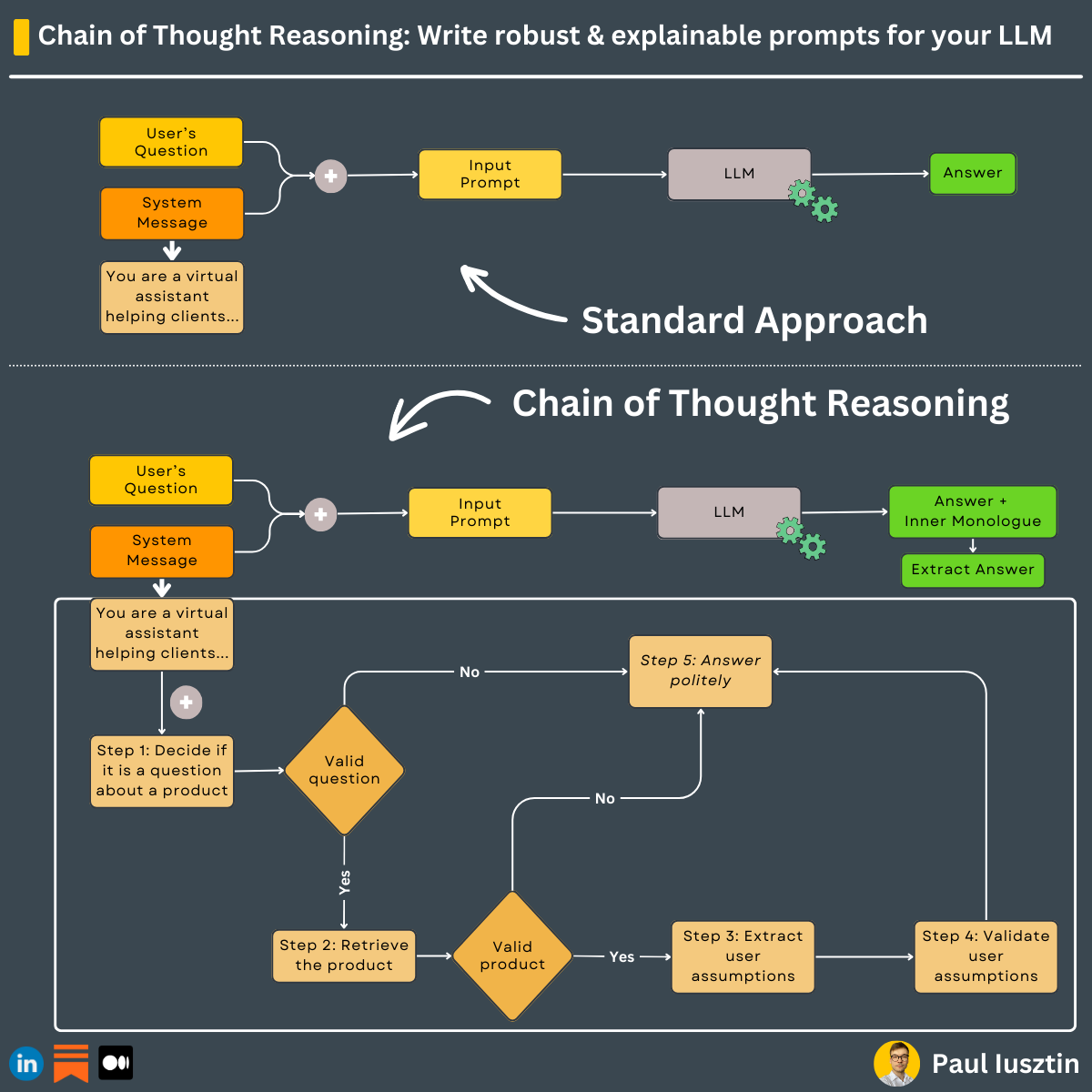

#2. Chain of Thought Reasoning: Write robust & explainable prompts for your LLM

𝗖𝗵𝗮𝗶𝗻 𝗼𝗳 𝗧𝗵𝗼𝘂𝗴𝗵𝘁 𝗥𝗲𝗮𝘀𝗼𝗻𝗶𝗻𝗴 is a 𝗽𝗼𝘄𝗲𝗿𝗳𝘂𝗹 𝗽𝗿𝗼𝗺𝗽𝘁 𝗲𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴 𝘁𝗲𝗰𝗵𝗻𝗶𝗾𝘂𝗲 to 𝗶𝗺𝗽𝗿𝗼𝘃𝗲 𝘆𝗼𝘂𝗿 𝗟𝗟𝗠'𝘀 𝗮𝗰𝗰𝘂𝗿𝗮𝗰𝘆 𝗮𝗻𝗱 𝗲𝘅𝗽𝗹𝗮𝗶𝗻 𝗶𝘁𝘀 𝗮𝗻𝘀𝘄𝗲𝗿.

Let me explain ↓

It is a method to force the LLM to follow a set of predefined steps.

🧠 𝗪𝗵𝘆 𝗱𝗼 𝘄𝗲 𝗻𝗲𝗲𝗱 𝗖𝗵𝗮𝗶𝗻 𝗼𝗳 𝗧𝗵𝗼𝘂𝗴𝗵𝘁 𝗥𝗲𝗮𝘀𝗼𝗻𝗶𝗻𝗴?

In complex scenarios, the LLM must thoroughly reason about a problem before responding to the question.

Otherwise, the LLM might rush to an incorrect conclusion.

By forcing the model to follow a set of steps, we can guide the model to "think" more methodically about the problem.

Also, it helps us explain and debug how the model reached a specific answer.

.

💡 𝗜𝗻𝗻𝗲𝗿 𝗠𝗼𝗻𝗼𝗹𝗼𝗴𝘂𝗲

The inner monologue is all the steps needed to reach the final answer.

Often, we want to hide all the reasoning steps from the end user.

In fancy words, we want to mimic an "inner monologue" and output only the final answer.

Each reasoning step is structured into a parsable format.

Thus, we can quickly load it into a data structure and output only the desired steps to the user.

.

↳ 𝗟𝗲𝘁'𝘀 𝗯𝗲𝘁𝘁𝗲𝗿 𝘂𝗻𝗱𝗲𝗿𝘀𝘁𝗮𝗻𝗱 𝘁𝗵𝗶𝘀 𝘄𝗶𝘁𝗵 𝗮𝗻 𝗲𝘅𝗮𝗺𝗽𝗹𝗲:

The input prompt to the LLM consists of a system message + the user's question.

The secret is in defining the system message as follows:

"""

You are a virtual assistant helping clients...

Follow the next steps to answer the customer queries.

Step 1: Decide if it is a question about a product ...

Step 2: Retrieve the product ...

Step 3: Extract user assumptions ...

Step 4: Validate user assumptions ...

Step 5: Answer politely ...

Make sure to answer in the following format:

Step 1: <𝘴𝘵𝘦𝘱_1_𝘢𝘯𝘴𝘸𝘦𝘳>

Step 2: <𝘴𝘵𝘦𝘱_2_𝘢𝘯𝘴𝘸𝘦𝘳>

Step 3: <𝘴𝘵𝘦𝘱_3_𝘢𝘯𝘴𝘸𝘦𝘳>

Step 4: <𝘴𝘵𝘦𝘱_4_𝘢𝘯𝘴𝘸𝘦𝘳>

Response to the user: <𝘧𝘪𝘯𝘢𝘭_𝘳𝘦𝘴𝘱𝘰𝘯𝘴𝘦>

"""

By forcing the LLM to follow a set of steps, we make it more likely to answer the right questions.

Ultimately, we will show the user only the <𝘧𝘪𝘯𝘢𝘭_𝘳𝘦𝘴𝘱𝘰𝘯𝘴𝘦> subset of the answer.

The other steps (aka "inner monologue") help:

- the model to reason

- the developer to debug
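Hiding the inner monologue then comes down to parsing the structured output. A minimal sketch, assuming the model followed the "Step N: ..." / "Response to the user: ..." format defined in the system message above; the regex, the fallback message, and the sample output (hand-written, not a real model response) are illustrative. The steps go to the logs for the developer, and only the final response goes to the user.

```python
import logging
import re

logger = logging.getLogger("cot")
FINAL_LABEL = "Response to the user:"
STEP_PATTERN = re.compile(r"^Step (\d+):\s*(.*)$")


def parse_cot_output(text: str) -> tuple[dict[int, str], str]:
    """Splits the LLM output into {step number: reasoning} and the final response."""
    steps: dict[int, str] = {}
    final_lines: list[str] = []
    section = None  # None, a step number, or "final"
    for line in text.splitlines():
        match = STEP_PATTERN.match(line)
        if match and section != "final":
            section = int(match.group(1))
            steps[section] = match.group(2).strip()
        elif line.startswith(FINAL_LABEL):
            section = "final"
            final_lines.append(line[len(FINAL_LABEL):].strip())
        elif section == "final":
            final_lines.append(line)  # multi-line final answers are kept intact
        elif section is not None and line.strip():
            steps[section] += " " + line.strip()  # continuation of a reasoning step
    return steps, "\n".join(final_lines).strip()


def answer_for_user(llm_output: str, fallback: str = "Sorry, I could not process your request.") -> str:
    steps, final = parse_cot_output(llm_output)
    for number, reasoning in sorted(steps.items()):
        logger.debug("Step %d: %s", number, reasoning)  # inner monologue: developer-only
    if not final:
        # The model broke the format: never leak the raw reasoning to the user.
        logger.warning("LLM output has no final response section")
        return fallback
    return final


sample_output = """Step 1: The user asks about a product.
Step 2: ProBook 15
Step 3: The user assumes the ProBook 15 has 32 GB of RAM.
Step 4: Based on the retrieved product, the assumption is wrong.
Response to the user: Thanks for asking! The ProBook 15 does not come with 32 GB of RAM."""

user_reply = answer_for_user(sample_output)
```

Returning a generic fallback when the final section is missing is a deliberate choice: showing the raw output would expose the inner monologue you meant to hide.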

Have you used this technique when writing prompts?

Extra: Why any ML system should use an ML platform as its central nervous system

Any ML system should use an ML platform as its central nervous system.

Here is why ↓

The primary role of an ML Platform is to bring structure to your:

- experiments

- visualizations

- models

- datasets

- documentation

Also, its role is to decouple your data preprocessing, experiment, training, and inference pipelines.

.

An ML platform helps you automate everything mentioned above using these 6 features:

1. experiment tracking: log & compare experiments

2. metadata store: know how a model (aka experiment) was generated

3. visualizations: a central hub for your visualizations

4. reports: create documents out of your experiments

5. artifacts: version & share your datasets

6. model registry: version & share your models

I have used many ML platforms before, but lately I have started using Comet, and I love it.

↳🔗 Comet ML

What is your favorite ML Platform?

That’s it for today 👾

See you next Thursday at 9:00 a.m. CET.

Have a fantastic weekend!

Paul

Whenever you’re ready, here is how I can help you:

The Full Stack 7-Steps MLOps Framework: a 7-lesson FREE course that will walk you step-by-step through how to design, implement, train, deploy, and monitor an ML batch system using MLOps good practices. It contains the source code + 2.5 hours of reading & video materials on Medium.

Machine Learning & MLOps Blog: in-depth topics about designing and productionizing ML systems using MLOps.

Machine Learning & MLOps Hub: a place where all my work is aggregated (courses, articles, webinars, podcasts, etc.).