Framework for Measuring Generative AI Risk Score!

Enhancing Enterprise ML with GenAI Risk Score, Mastering Advanced ML Concepts, and Introducing RestGPT for API Integration

Decoding ML Notes

Hey everyone, Happy Saturday!

Today, we move on to part two of our exciting series. We're sharing "DML Notes" every week.

This series offers quick summaries, helpful hints, and advice about Machine Learning & MLOps engineering in a short and easy-to-understand format.

Every Saturday, "DML Notes" will bring you a concise, easy-to-digest roundup of the most significant concepts and practices in MLE, Deep Learning, and MLOps. We aim to craft this series to enhance your understanding and keep you updated while respecting your time (2-3 minutes) and curiosity.

This week’s topics:

GenAI Risk Score framework when developing enterprise ML projects

Deep Dive into ML: Beyond the Basics to System Mastery

RestGPT Architecture: Connecting Large Language Models with Real-World RESTful APIs!

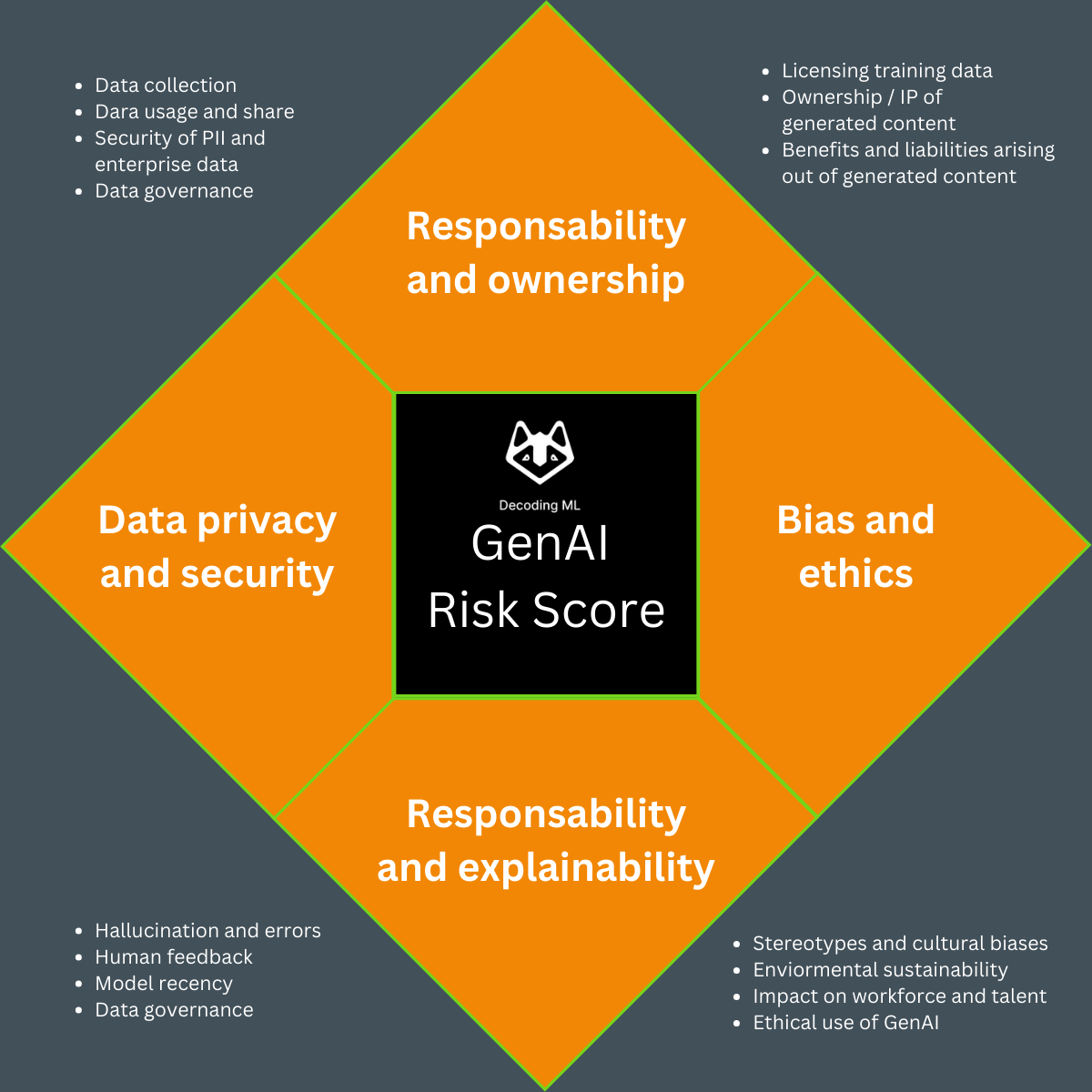

1. GenAI Risk Score Framework

In my daily work, I use a framework called 'GenAI Risk Score', which helps protect both the business and the outcome of the product.

Using LLMs in production is not only about the results. It's also about data privacy, algorithmic bias, evaluation, and ownership of the data and the generated content.

🔐 Data Privacy and Security: It's not just about collecting data; it's how we use, share, and protect personally identifiable information (PII) and maintain robust data governance standards.

📜 Responsibility and Ownership: The discourse isn't complete without addressing the licensing of training data, the intricacies of ownership and intellectual property rights, and the benefits versus liabilities of generated content.

⚖️ Bias and Ethics: From tackling stereotypes and cultural biases to ensuring environmental sustainability and ethical AI use, this quadrant reminds us of the broader societal implications of AI technology.

🤖 Responsibility and Explainability: Minimizing hallucinations and errors, incorporating human feedback, ensuring model recency, and upholding data governance are all crucial for creating trustworthy AI systems.
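To make the four quadrants above a bit more concrete, here is a minimal sketch of how they could be rolled up into a single number. Only the quadrant names come from the framework; the 0-5 rating scale, the equal default weights, and the `RiskAssessment` class are assumptions made up for this illustration, not part of the framework itself.

```python
from dataclasses import dataclass
from typing import Optional

# Quadrant names follow the text above. The rating scale and the weights
# are assumptions for this sketch, not part of the framework.
QUADRANTS = (
    "data_privacy_and_security",
    "responsibility_and_ownership",
    "bias_and_ethics",
    "responsibility_and_explainability",
)
MAX_RATING = 5  # assumed scale: 0 = no known risk, 5 = severe risk


@dataclass(frozen=True)
class RiskAssessment:
    """Hypothetical container holding one rating per quadrant."""

    ratings: dict

    def score(self, weights: Optional[dict] = None) -> float:
        """Return the weighted average of the ratings, scaled to 0.0-1.0."""
        weights = weights or {q: 1.0 for q in QUADRANTS}
        missing = [q for q in QUADRANTS if q not in self.ratings]
        if missing:
            raise ValueError(f"missing ratings for: {missing}")
        for quadrant in QUADRANTS:
            if not 0 <= self.ratings[quadrant] <= MAX_RATING:
                raise ValueError(f"rating out of range for: {quadrant}")
        total_weight = sum(weights[q] for q in QUADRANTS)
        weighted = sum(self.ratings[q] * weights[q] for q in QUADRANTS)
        return weighted / (MAX_RATING * total_weight)


if __name__ == "__main__":
    assessment = RiskAssessment(
        ratings={
            "data_privacy_and_security": 4,
            "responsibility_and_ownership": 2,
            "bias_and_ethics": 3,
            "responsibility_and_explainability": 1,
        }
    )
    print(f"GenAI risk score: {assessment.score():.2f}")
```

A single number like this is mostly useful for comparing projects or tracking one project over time. The per-quadrant ratings are what tell you where to act.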

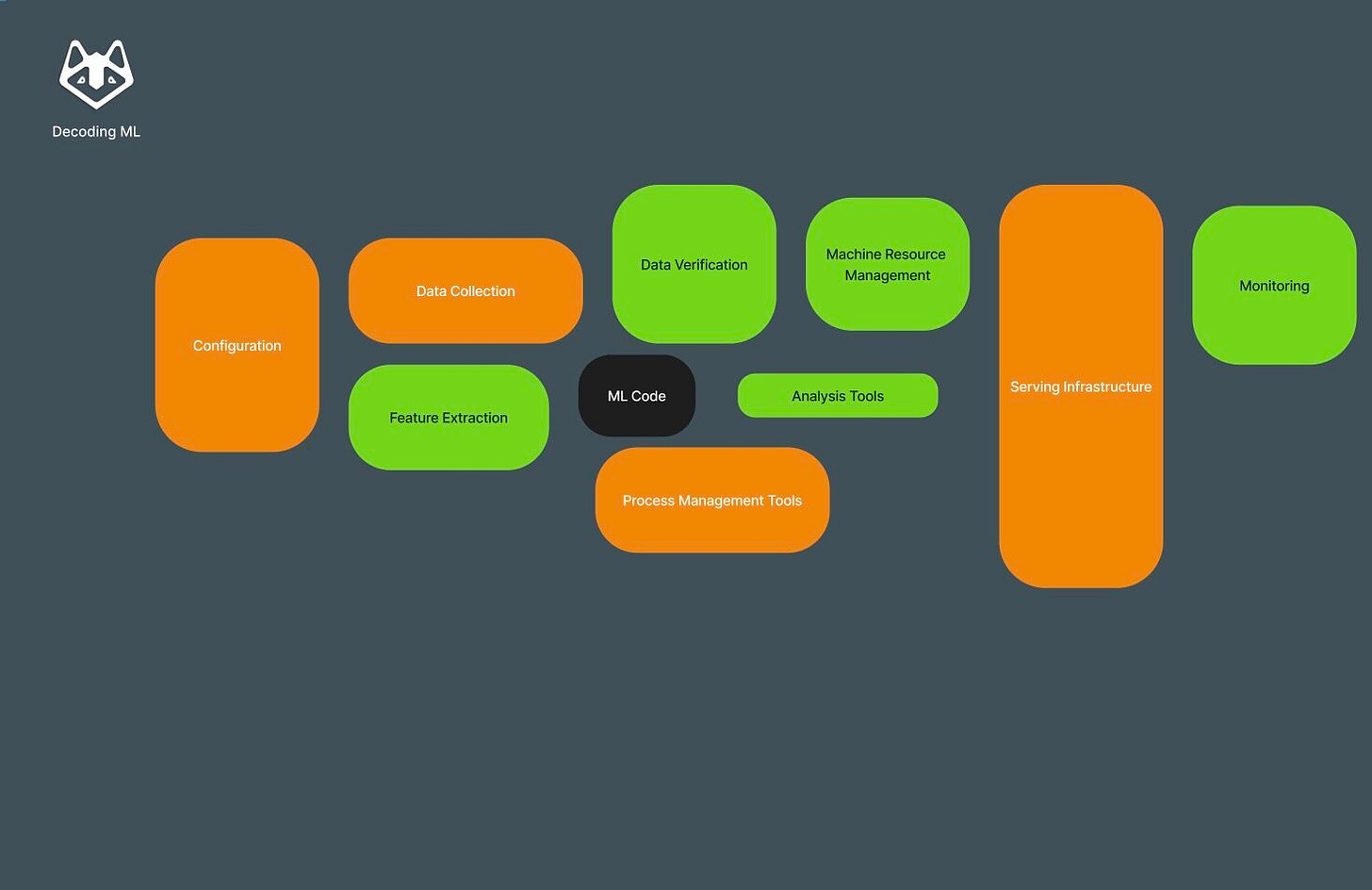

2. Deep Dive into ML: Beyond the Basics to System Mastery

As the field of MLOps has grown, bringing with it an array of tools and methodologies, the role of ML code has shifted. It's now just one piece of a much larger puzzle.

Top 8 practical issues:

🎯 Understand the ML model lifecycle and how to adapt it to specific use cases.

🎯 Comprehend how an ML model integrates within the broader system architecture.

🎯 Ensure seamless communication between the ML model and other system components.

🎯 Embrace knowledge in data engineering and system design patterns without hesitation.

🎯 Recognize the nuances between staging environments and production.

🎯 Plan for deployment and usage of the ML model in production settings.

🎯 Learn about the cloud. Choose a provider and experiment. Don't wait for the Ops guy to deploy your model.

🎯 Be prepared to explain ML model predictions to other engineers or clients, enhancing transparency and trust.

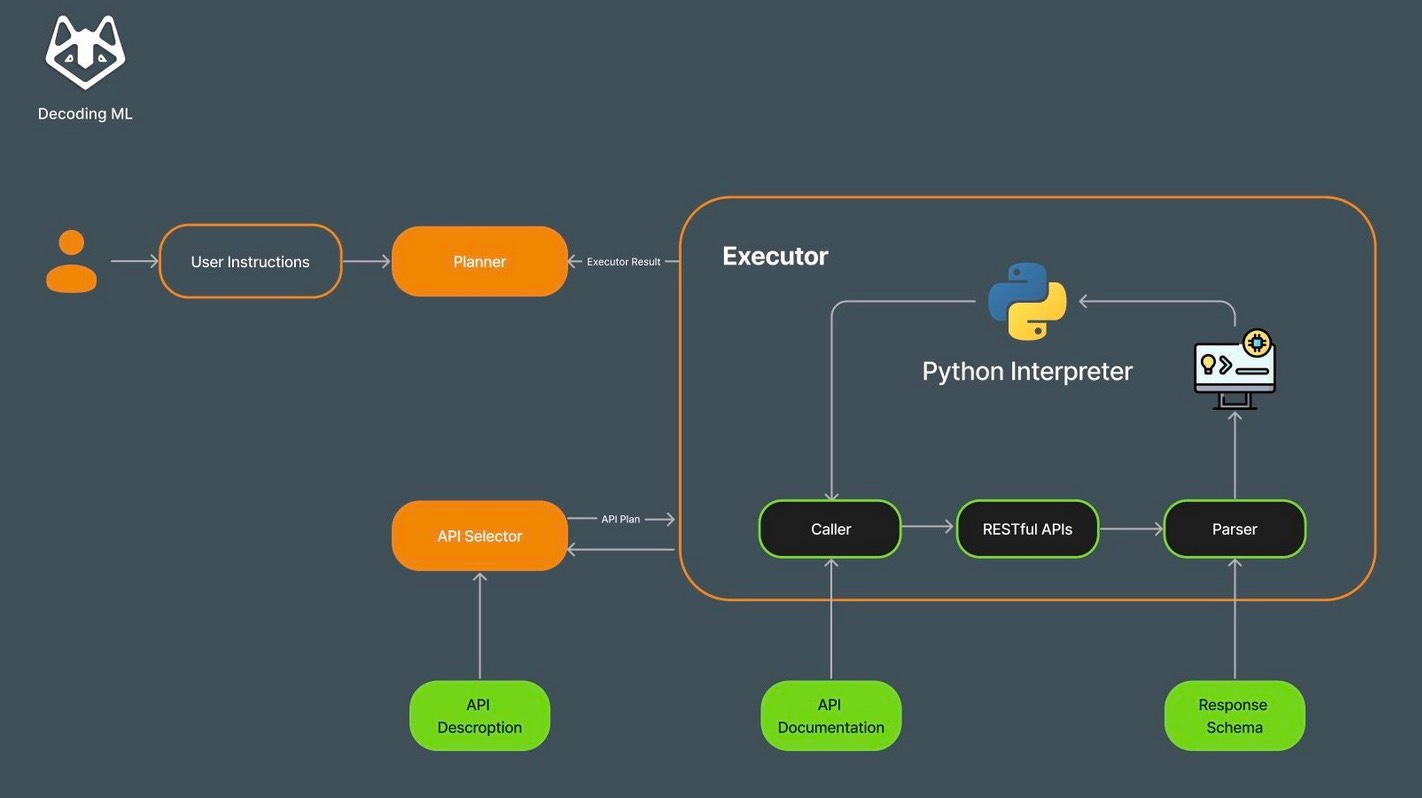

3. RestGPT Architecture: Connecting Large Language Models with Real-World RESTful APIs

This research introduces RestGPT, a novel framework that seamlessly integrates Large Language Models (LLMs) like GPT-3 with RESTful APIs, unlocking new potentials for AI applications in real-world scenarios.

🔍 Key Takeaways:

The RestGPT architecture has 3 big components:

1️⃣ Planner is like the brainy strategist, breaking down complex tasks into bite-sized pieces. It’s like planning a big project by first listing out smaller, manageable tasks.

2️⃣ API Selector is the savvy connector, picking the right tools (APIs) for each task. Imagine you’re cooking a special dish and need the perfect ingredients; API Selector knows exactly what you need and where to find it.

3️⃣ Executor is the doer, turning plans into action by talking to APIs and making things happen. It’s like having a personal assistant who not only knows what you need but also gets it done.
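The three components above can be sketched as a tiny pipeline. This is a toy illustration, not the RestGPT implementation: in RestGPT each role is played by an LLM, while here the planner is a naive string split, the selector is a word-overlap match, and the APIs are plain Python callables. The names `ApiSpec`, `plan`, `select_api`, `execute`, and `run` are all hypothetical.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass
class ApiSpec:
    """Hypothetical description of one API the system can call."""

    name: str
    description: str            # text the selector matches against
    call: Callable[[str], str]  # stand-in for a real HTTP request


def plan(task: str) -> list[str]:
    """Planner: break a task into sub-tasks (here, a naive split on ' and ')."""
    return [step.strip() for step in task.split(" and ") if step.strip()]


def select_api(step: str, apis: list[ApiSpec]) -> ApiSpec:
    """API Selector: pick the API whose description shares the most words with the step."""
    step_words = set(step.lower().split())
    return max(
        apis,
        key=lambda api: len(step_words & set(api.description.lower().split())),
    )


def execute(step: str, api: ApiSpec) -> str:
    """Executor: call the selected API and return its response."""
    return api.call(step)


def run(task: str, apis: list[ApiSpec]) -> list[tuple[str, str, str]]:
    """Run planner -> selector -> executor for every sub-task."""
    results = []
    for step in plan(task):
        api = select_api(step, apis)
        results.append((step, api.name, execute(step, api)))
    return results


if __name__ == "__main__":
    apis = [
        ApiSpec("search_movies", "search movies by title", lambda s: "movie results"),
        ApiSpec("get_weather", "get the weather forecast for a city", lambda s: "weather results"),
    ]
    for step, api_name, response in run(
        "search movies by Nolan and get the weather in Paris", apis
    ):
        print(f"{step!r} -> {api_name}: {response}")
```

Keeping the three roles as separate functions means each one can be replaced on its own, for example swapping the word-overlap selector for an LLM prompt without touching the executor.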

🌟 Implications for the Industry:

🧑🏾‍💻 For Developers: RestGPT simplifies the process of incorporating complex API interactions into AI-driven applications.

Imagine building a chatbot that can not only understand user queries but also fetch real-time data from various web services without manual coding for each API. This significantly reduces development time and opens up creative avenues for new features and functionalities.

💼 For Businesses: This technology promises to change product offerings, enabling businesses to deliver more sophisticated and personalized user experiences.

Companies can automate tasks that previously required human intervention, such as customer support, data analysis, and even decision-making processes, thereby increasing efficiency and reducing costs.

💻 Implementation Takeaways:

1️⃣ This architecture can also be extended with private LLMs such as Llama 2 or Mixtral.

2️⃣ Imagine making it even better: a user feedback loop could seriously spice up the executor component.

3️⃣ The API documentation must be very well written with all the implementation details.
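The first two takeaways can be sketched together: hide the model behind a small interface so the backend is swappable, and let the executor carry user feedback into later prompts. Everything here is an assumption for illustration. `LLMClient`, `EchoLLM`, and `FeedbackExecutor` are hypothetical names, and `EchoLLM` is a stand-in that calls no real model.

```python
from typing import Protocol


class LLMClient(Protocol):
    """Hypothetical minimal interface any backend would need to satisfy."""

    def complete(self, prompt: str) -> str: ...


class EchoLLM:
    """Stand-in backend for local testing; a real one would wrap a hosted or self-hosted model."""

    def complete(self, prompt: str) -> str:
        return f"echo: {prompt}"


class FeedbackExecutor:
    """Executor sketch that prepends collected user feedback to each prompt."""

    def __init__(self, llm: LLMClient) -> None:
        self.llm = llm
        self.feedback: list[str] = []

    def add_feedback(self, note: str) -> None:
        """Store a piece of user feedback for use in later prompts."""
        self.feedback.append(note)

    def build_prompt(self, step: str) -> str:
        """Build the prompt for one step, including any feedback so far."""
        notes = "\n".join(f"- {note}" for note in self.feedback)
        header = f"User feedback so far:\n{notes}\n" if notes else ""
        return f"{header}Task: {step}"

    def run(self, step: str) -> str:
        """Send the prompt for this step to whichever backend was injected."""
        return self.llm.complete(self.build_prompt(step))


if __name__ == "__main__":
    executor = FeedbackExecutor(EchoLLM())
    print(executor.run("list my playlists"))
    executor.add_feedback("return results as JSON")
    print(executor.run("list my playlists"))
```

Because `FeedbackExecutor` only depends on the `complete` method, moving from one model to another should only require a new class with that method.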