Important Recap and What's Next

Past series and our new one on AI Agents. Everything open-source!

We’re officially Decoding AI Magazine! If you missed the announcement, you can catch up here.

Our playbook stays the same. When there’s a complex topic, we give you the roadmap.

We did it with Anca Ioana Muscalagiu’s series on designing MCP systems:

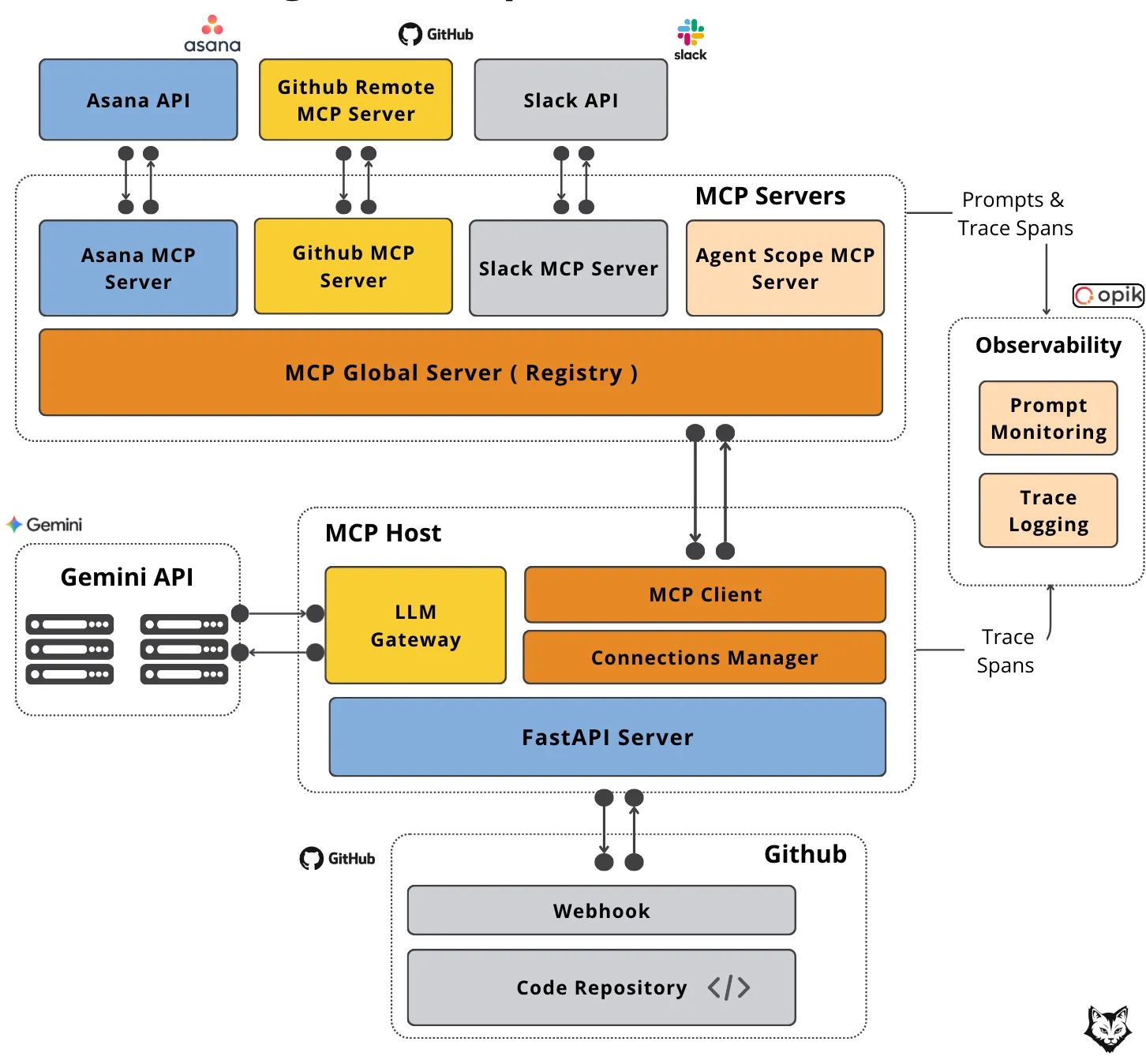

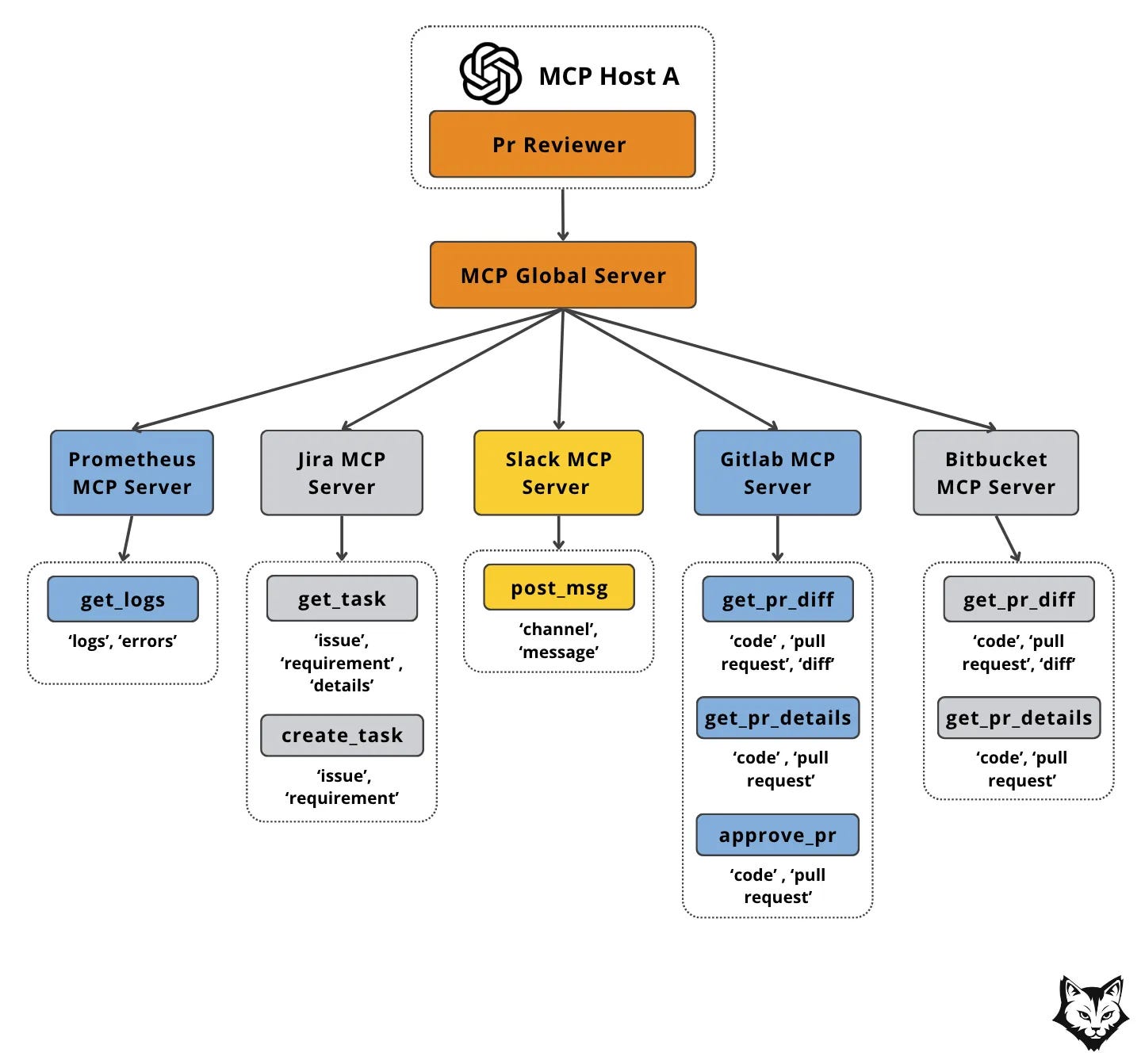

An open-source course that teaches you how to build a production-grade AI Pull Request reviewer from scratch using MCP, microservices and a real-world engineering mindset.

Lesson 1: Why MCP Breaks Old Enterprise AI Architectures

This lesson explains how MCP’s modular approach breaks apart old, monolithic AI systems to create a scalable and reusable architecture (using an AI PR Reviewer use case as an example). Read more.

Lesson 2: Build with MCP Like a Real Engineer

Once you understand the architecture, the next lesson gets extremely hands-on. Using FastMCP, we implement an enterprise-ready AI PR Reviewer from scratch, integrated with GitHub, Asana, and Slack. Read more.

Lesson 3: Getting Agent Architecture Right

Finally, with the intuition in place, this lesson guides you through refactoring our AI PR Reviewer project using the 5 most popular agent-based architectures. Read more.

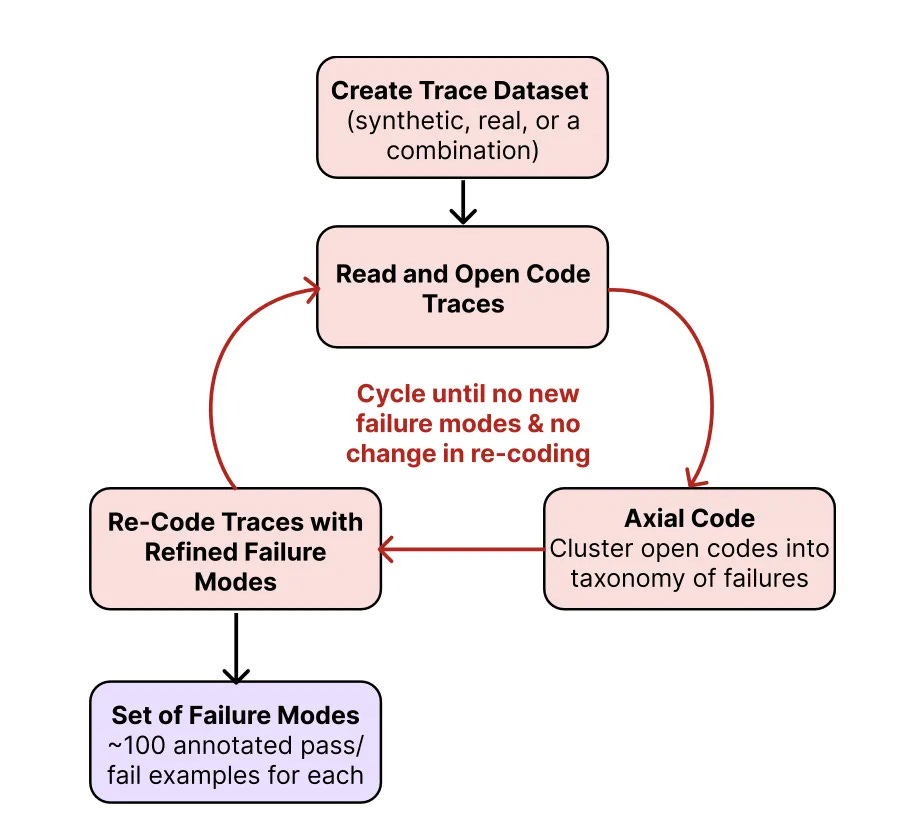

… and with Hamel Husain’s series on AI Evals:

This series teaches proven methods to measure, compare, and improve your AI application using the crown jewel of AI Engineering: AI Evals. Ultimately, it enables you to build products that consistently outperform the competition.

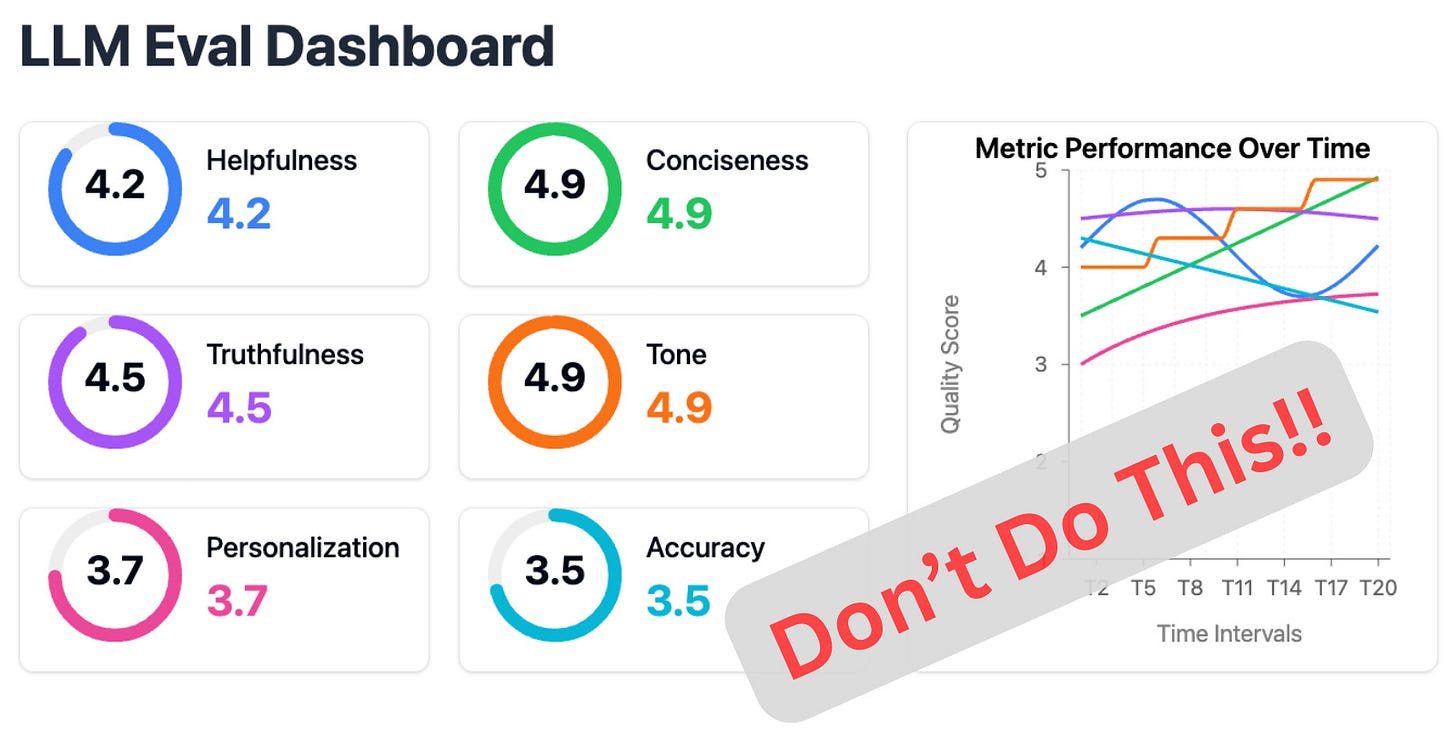

The Mirage of Generic AI Metrics

Learn why chasing generic, off-the-shelf metrics is a dead end that won’t actually tell you if your AI product is any good. Read more.

The 5-Star Lie: You Are Doing AI Evals Wrong

See why simple 5-star ratings are a trap and learn the hands-on approach that works. Read more.

What’s next?

We’ve been working with the Towards AI team on a full course on AI Agents, and we realized the fundamentals were too important not to share with everyone, for free.

So, we’re bringing you an 8-part series on AI Agents Foundations.

This isn't another hype series. It's the stuff you actually need to build real-world agents that work.

Here's what’s coming:

Workflow vs. Agents. Where to even begin?

Structured Outputs. Getting reliable, predictable results.

Workflow Patterns. Designing agentic systems that don't fail.

Tools. Giving your agents superpowers with function calling.

Planning. How agents think (ReAct vs. Plan-and-Execute).

Looking into LangGraph’s ReAct implementation.

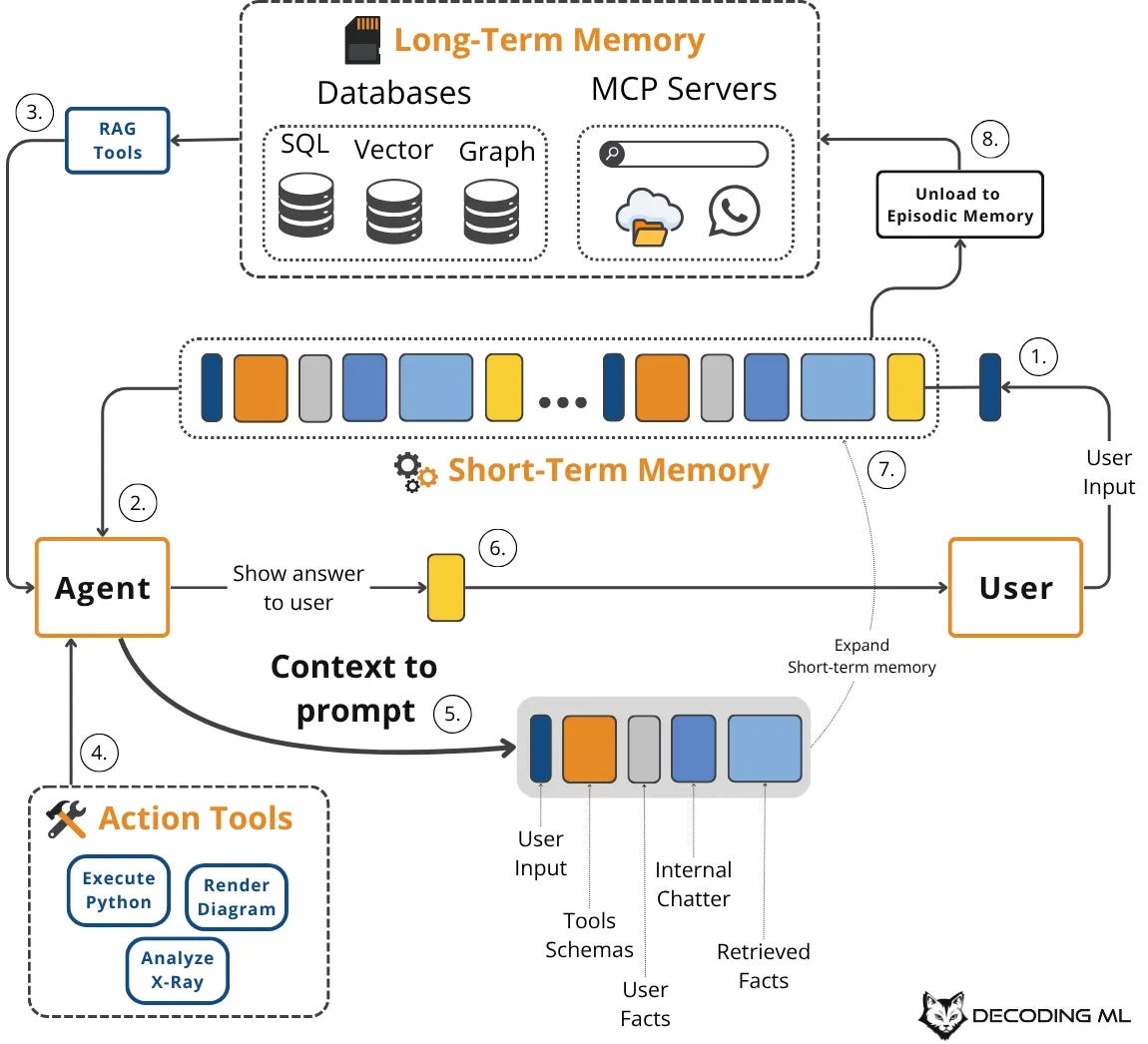

Memory. How agents remember and learn.

Working with Multimodal Data. Integrating images and other data types.

The first article, "Workflow vs. Agents", drops next Tuesday, October 7, 2025.

See you next week.

👋 I’d love your feedback to help improve Decoding AI Magazine.

Share what you want to see next or your top takeaway. I read and reply to every comment!

Whenever you’re ready, there are 3 ways we can help you:

Perks: Exclusive discounts on our recommended learning resources (books, live courses, self-paced courses and learning platforms).

The LLM Engineer’s Handbook: Our bestselling book, which teaches you an end-to-end framework for building production-ready LLM and RAG applications, from data collection to deployment (get up to 20% off using our discount code).

Free open-source courses: Master production AI with our end-to-end open-source courses, which reflect real-world AI projects and cover everything from system architecture to data collection, training and deployment.

Images

If not otherwise stated, all images are created by the author.
