PI #005: Harnessing the Strength of the Batch Architecture in Serving ML Models

Serving an ML Model Using a Batch Architecture. The Perfect DUO: FastAPI + Streamlit

This newsletter aims to give you weekly insights about designing and productionizing ML systems using good MLOps practices 🔥.

This week I will go over the following:

Why is serving an ML model using a batch architecture so powerful?

The Perfect DUO: FastAPI + Streamlit

Also, I have some exciting news to share with you guys 🎉

Why is serving an ML model using a batch architecture so powerful?

When you first start deploying your ML model, you want an initial end-to-end flow as fast as possible.

Doing so lets you quickly provide value, get feedback, and even collect data.

But here is the catch...

Serving an ML model in real time is tricky, as it usually takes many iterations to optimize the model for:

- low latency

- high throughput

Initially, serving your model in batch mode works like a hack.

By precomputing the model's predictions and storing them in dedicated storage, you make an offline model behave like a real-time online one from the consumer's point of view.

Thus, you no longer have to worry about your model's latency and throughput at request time. The consumer loads the predictions directly from that storage.

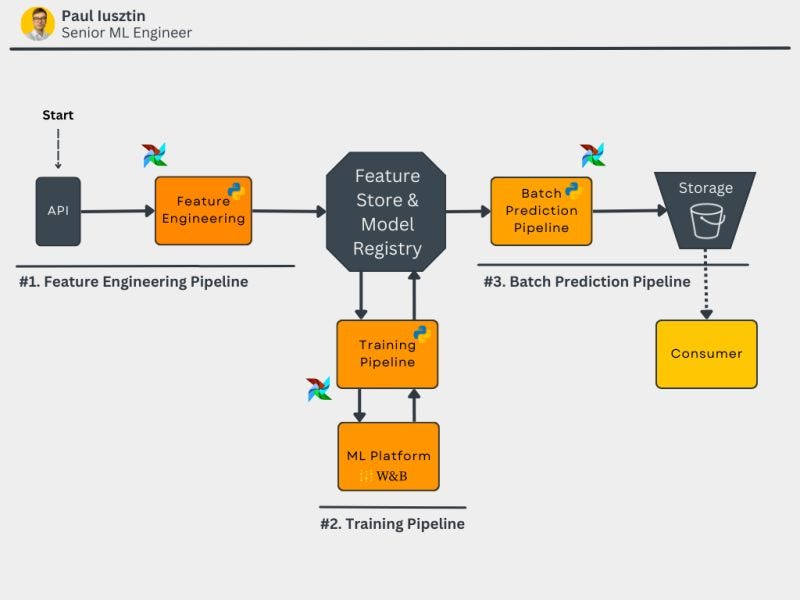

𝐓𝐡𝐞𝐬𝐞 𝐚𝐫𝐞 𝐭𝐡𝐞 𝐦𝐚𝐢𝐧 𝐬𝐭𝐞𝐩𝐬 𝐨𝐟 𝐚 𝐛𝐚𝐭𝐜𝐡 𝐚𝐫𝐜𝐡𝐢𝐭𝐞𝐜𝐭𝐮𝐫𝐞:

- extract raw data from a real data source

- clean, validate, and aggregate the raw data within a feature pipeline

- load the cleaned data into a feature store

- experiment to find the best model + transformations using the data from the feature store

- upload the best model from the training pipeline into the model registry

- inside a batch prediction pipeline, use the best model from the model registry to compute the predictions

- store the predictions in some storage

- the consumer will download the predictions from the storage

- repeat the whole process hourly, daily, weekly, etc. (it depends on your context)
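
To make the last steps more concrete, here is a minimal sketch of the batch prediction, storage, and consumer parts. Everything in it is an assumption for illustration: `predict` is a hypothetical stand-in for the model you would pull from the model registry, the `features` dict stands in for rows read from the feature store, and a local JSON file plays the role of the prediction storage.

```python
import json
import tempfile
from pathlib import Path


def predict(row: dict) -> float:
    """Hypothetical stand-in for the best model from the model registry."""
    return 0.5 * row["clicks"] + 0.1 * row["views"]


def run_batch_prediction(feature_rows: dict, storage_path: Path) -> None:
    """Compute predictions for every entity and write them to storage in one go."""
    predictions = {entity_id: predict(row) for entity_id, row in feature_rows.items()}
    storage_path.write_text(json.dumps(predictions))


def load_prediction(entity_id: str, storage_path: Path) -> float:
    """Consumer side: a plain lookup, with no model call at request time."""
    return json.loads(storage_path.read_text())[entity_id]


# Toy rows standing in for data read from the feature store (assumption).
features = {
    "user_1": {"clicks": 4, "views": 10},
    "user_2": {"clicks": 0, "views": 30},
}

# A temporary JSON file stands in for S3, Redis, or any other storage.
storage = Path(tempfile.mkdtemp()) / "predictions.json"
run_batch_prediction(features, storage)
user_1_score = load_prediction("user_1", storage)
```

In a real setup, a scheduler would call something like `run_batch_prediction` hourly or daily, and the consumer would only ever touch the storage.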

The main downside of deploying your model in batch mode is that the predictions will always lag behind the latest data.

For example, in a recommender system, if you make your predictions daily, it won't capture a user's behavior in real time. The predictions will only be updated at the end of the day.

That is why moving to other architectures, such as request-response or streaming, will be natural after your system matures in batch mode.

So remember, when you initially deploy your model, using a batch mode architecture will be your best shot for a good user experience.

Let me know in the comments what your strategy is.

Want to 𝗹𝗲𝗮𝗿𝗻 𝗠𝗟 & 𝗠𝗟𝗢𝗽𝘀 𝗶𝗻 𝗮 𝘀𝘁𝗿𝘂𝗰𝘁𝘂𝗿𝗲𝗱 𝘄𝗮𝘆? After 6 months of work, I finally finished 𝘛𝘩𝘦 𝘍𝘶𝘭𝘭 𝘚𝘵𝘢𝘤𝘬 7-𝘚𝘵𝘦𝘱𝘴 𝘔𝘓𝘖𝘱𝘴 𝘍𝘳𝘢𝘮𝘦𝘸𝘰𝘳𝘬 Medium series.

In 2.5 hours of reading & video materials, you will learn how to:

- design a batch-serving architecture

- use Hopsworks as a feature store

- design a feature engineering pipeline that reads data from an API

- build a training pipeline with hyper-parameter tuning

- use W&B as an ML Platform to track your experiments, models, and metadata

- implement a batch prediction pipeline

- use Poetry to build your own Python packages

- deploy your own private PyPI server

- orchestrate everything with Airflow

- use the predictions to code a web app using FastAPI and Streamlit

- use Docker to containerize your code

- use Great Expectations to ensure data validation and integrity

- monitor the performance of the predictions over time

- deploy everything to GCP

- build a CI/CD pipeline using GitHub Actions

- discuss trade-offs & future improvements

𝗬𝗼𝘂 𝗰𝗮𝗻 𝗮𝗰𝗰𝗲𝘀𝘀 𝘁𝗵𝗲 𝗰𝗼𝘂𝗿𝘀𝗲 𝗼𝗻:

➝ 𝘔𝘦𝘥𝘪𝘶𝘮'𝘴 𝘛𝘋𝘚 𝘱𝘶𝘣𝘭𝘪𝘤𝘢𝘵𝘪𝘰𝘯: text tutorials + videos

➝ 𝘎𝘪𝘵𝘏𝘶𝘣: open-source code + docs

I published the course on Medium's TDS publication to make it accessible to as many people as possible. Thus 👇

... anyone can learn the fundamentals of MLE & MLOps.

So no more excuses. Just go and build your own project 🔥

🔗 GitHub Code.

The Medium link above might not work well on mobile. So here are the first lessons (you will find the rest of them within the articles):

🔗 Lesson 1: A Framework for Building a Production-Ready Feature Engineering Pipeline

🔗 Lesson 2: A Guide to Building Effective Training Pipelines for Maximum Results

🔗 Lesson 4: Unlocking MLOps using Airflow: A Comprehensive Guide to ML System Orchestration

I worked hard to provide you with a seamless experience while doing the course. Let me know on LinkedIn if you have any questions and what your experience was. Thanks ✌🏻

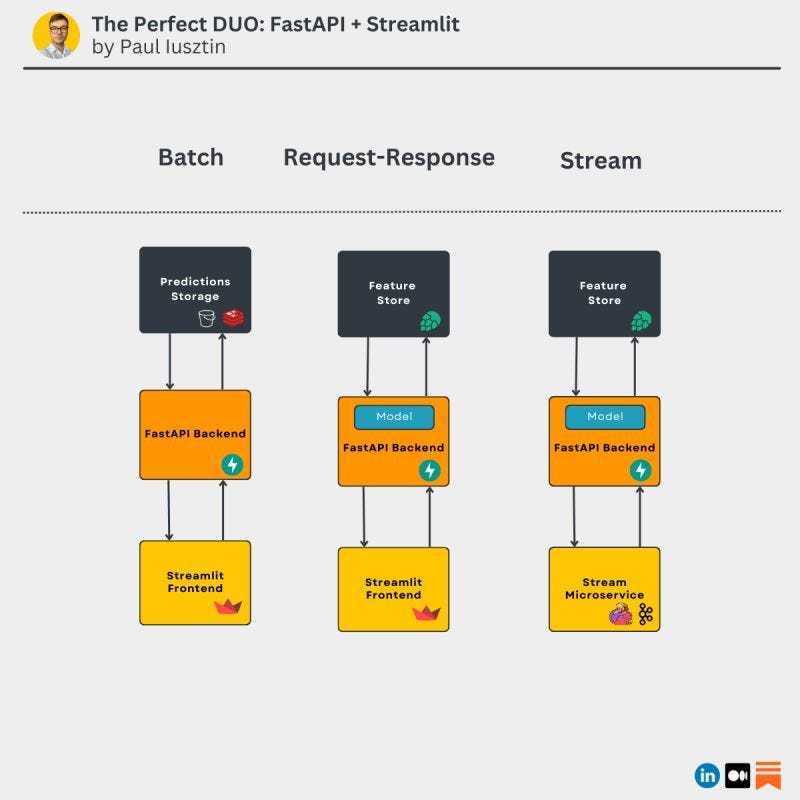

The Perfect DUO: FastAPI + Streamlit

2 tools you should know as an ML Engineer

Here are 2 reasons why FastAPI & Streamlit should be in your MLE stack 👇

#𝟭. 𝗣𝘆𝘁𝗵𝗼𝗻, 𝗣𝘆𝘁𝗵𝗼𝗻, 𝗣𝘆𝘁𝗵𝗼𝗻!

As an MLE, Python is your magic wand.

Using FastAPI & Streamlit, you can build full-stack web apps using only Python.

#𝟮. 𝗘𝘅𝘁𝗿𝗲𝗺𝗲𝗹𝘆 𝗳𝗹𝗲𝘅𝗶𝗯𝗹𝗲

Using FastAPI & Streamlit, you can deploy an ML model in almost any scenario.

<< 𝘉𝘢𝘵𝘤𝘩 >>

Expose the predictions from any storage, such as S3 or Redis, using FastAPI as REST endpoints.

Visualize the predictions using Streamlit by calling the FastAPI REST endpoints.

<< 𝘙𝘦𝘲𝘶𝘦𝘴𝘵-𝘙𝘦𝘴𝘱𝘰𝘯𝘴𝘦 >>

Wrap your model using FastAPI and expose its functionalities as REST endpoints.

Yet again... visualize the predictions using Streamlit by calling the FastAPI REST endpoints.

<< 𝘚𝘵𝘳𝘦𝘢𝘮 >>

Wrap your model using FastAPI and expose it as REST endpoints.

But this time, the REST endpoints will be called from a Flink or Kafka Streams microservice.

Using this tech stack won't be the optimal solution in 100% of use cases,

... but in most cases:

- it will get the job done

- you can quickly prototype almost any ML application.

So remember…

You should learn FastAPI & Streamlit because:

- Python all the way!

- you can quickly deploy a model in almost any architecture scenario

Do you use FastAPI & Streamlit?

To learn more, check out Lesson 6 of my MLE & MLOps course: 𝘍𝘢𝘴𝘵𝘈𝘗𝘐 𝘢𝘯𝘥 𝘚𝘵𝘳𝘦𝘢𝘮𝘭𝘪𝘵: 𝘛𝘩𝘦 𝘗𝘺𝘵𝘩𝘰𝘯 𝘋𝘶𝘰 𝘠𝘰𝘶 𝘔𝘶𝘴𝘵 𝘒𝘯𝘰𝘸 𝘈𝘣𝘰𝘶𝘵.

Also, I want to let you know that DeepLearning.ai will soon release "The AI for Good Specialization".

"It is a beginner-friendly 3-course program that will teach you how to combine human and machine intelligence to create a positive social impact."

I am happy and excited that they take this stuff seriously and educate people about when & if you should use AI.

...and, of course, about its risks.

Pre-enroll now and get 14 free days.

This is not a promotional message. I am just excited to see something like this exists.

See you next week on Thursday at 9:00 am CET.

Have a fabulous weekend!

💡 My goal is to help machine learning engineers level up in designing and productionizing ML systems. Follow me on LinkedIn and Medium for more insights!

🔥 If you enjoy reading articles like this and wish to support my writing, consider becoming a Medium member. Using my referral link, you can support me without extra cost while enjoying limitless access to Medium's rich collection of stories.

Thank you ✌🏼 !