PI #007: Airflow - One Piece of Infra All ML Pipelines Must Have

The Benefits of Using Airflow. How to Integrate Airflow in Your Python Code.

This newsletter aims to give you weekly insights about designing and productionizing ML systems using MLOps good practices 🔥.

This week I will go over the following:

- 3 key non-obvious benefits of using an orchestration tool such as Airflow for your ML pipeline.

- Integrating Airflow with your Python code in a modular and flexible way.

🎉 Also, I am thrilled to let you know that I recently started working at Metaphysic, a generative AI company.

👀 Thus, along with my MLE and MLOps content, I will start talking about generative AI from my real-world experience with the field.

3 key non-obvious benefits of using an orchestration tool such as Airflow for your ML pipeline.

An orchestration tool such as Airflow brings 3 key non-obvious benefits to your ML pipeline. They will help you:

- minimize errors

- maximize the value you add

#𝟏. 𝐆𝐥𝐮𝐞 𝐚𝐥𝐥 𝐲𝐨𝐮𝐫 𝐬𝐜𝐫𝐢𝐩𝐭𝐬 𝐭𝐨𝐠𝐞𝐭𝐡𝐞𝐫

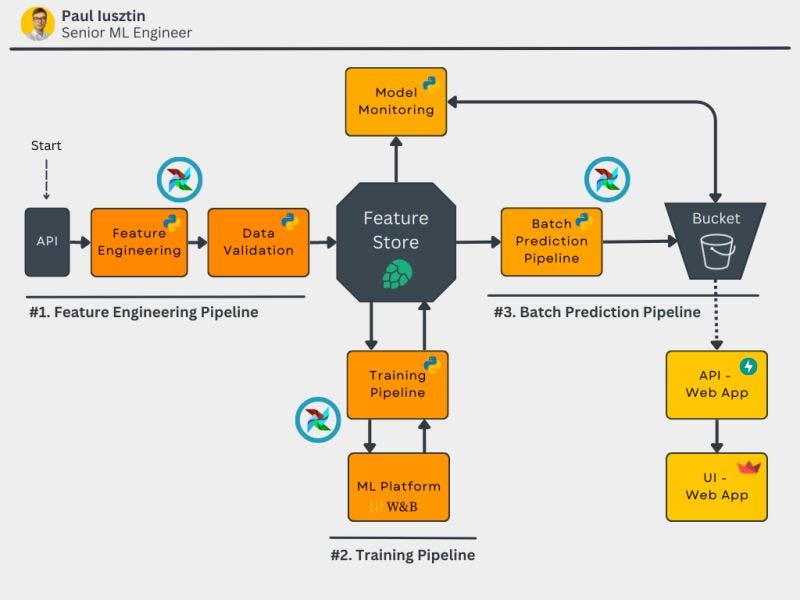

Most ML pipelines contain multiple scripts to run. In the example from my diagram, you run 6 scripts (yellow blocks) in a specific order and configuration.

Also, you might have decision points that introduce even more complexity, such as choosing between running hyperparameter tuning and reusing the latest best configuration.

Using an orchestration tool (e.g., Airflow), you can easily connect all these components into a single DAG, which can be run with a button press.

Otherwise, you can easily perform the wrong step and lose hours wondering what you did wrong.
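To make the idea concrete, here is a minimal sketch of what "gluing scripts into a DAG" means. It uses only Python's standard library (`graphlib`), not Airflow itself, to show how a dependency graph fixes the run order so you never pick it by hand. The six step names and the `resolve_run_order` helper are hypothetical and are not taken from the diagram; in Airflow, you would declare the same dependencies between tasks inside a DAG.

```python
from graphlib import TopologicalSorter

# Hypothetical step names. Each key maps to the steps that must finish before it.
DEPENDENCIES = {
    "compute_features": set(),
    "validate_data": {"compute_features"},
    "tune_hyperparameters": {"validate_data"},
    "train_model": {"tune_hyperparameters"},
    "batch_predict": {"train_model"},
    "monitor_performance": {"batch_predict"},
}


def resolve_run_order(dependencies, skip=frozenset()):
    """Return one valid execution order, leaving out any skipped steps."""
    order = TopologicalSorter(dependencies).static_order()
    return [step for step in order if step not in skip]


# Decision point: either tune again or reuse the latest best configuration.
full_run = resolve_run_order(DEPENDENCIES)
fast_run = resolve_run_order(DEPENDENCIES, skip={"tune_hyperparameters"})

print(" -> ".join(full_run))
print(" -> ".join(fast_run))
```

The order is derived from the declared dependencies, so adding a step means declaring what it depends on rather than remembering where to run it.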

#𝟐. 𝐍𝐞𝐯𝐞𝐫 𝐮𝐬𝐞 𝐭𝐡𝐞 𝐰𝐫𝐨𝐧𝐠 𝐯𝐞𝐫𝐬𝐢𝐨𝐧𝐬 & 𝐩𝐚𝐫𝐚𝐦𝐞𝐭𝐞𝐫𝐬

As you can see in the diagram, almost every step in your ML pipeline will generate an artifact with its own version:

- dataset

- configuration

- model

- predictions

Most of the steps within the ML pipeline will require as input the versions of previously generated artifacts.

For example, to compute the predictions, you must specify the versions of the model and the features you want to use.

Setting all these versions manually makes it easy to introduce bugs.

We are humans, and at some point, you will forget to change a version or set the wrong one.
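Here is a minimal sketch of the alternative: each step returns the version of the artifact it produced, and the next step receives it as an argument, so nobody types a version by hand. It uses plain Python functions rather than Airflow. The `Artifact` class, the step names, and the version strings are all hypothetical. In Airflow, TaskFlow tasks pass return values downstream in a similar way through XComs.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Artifact:
    """A named artifact and the version produced by a pipeline step."""

    name: str
    version: str


def compute_features() -> Artifact:
    # Hypothetical version; a real step would get it from a feature store.
    return Artifact("features", "1.3.0")


def train_model(features: Artifact) -> Artifact:
    # The model version records which feature version it was trained on.
    return Artifact("model", f"2.0.0+features-{features.version}")


def batch_predict(model: Artifact, features: Artifact) -> Artifact:
    # Predictions are tagged with the exact model version that produced them.
    return Artifact("predictions", f"model-{model.version}")


# Versions flow from step to step instead of being set manually.
features = compute_features()
model = train_model(features)
predictions = batch_predict(model, features)
print(predictions)
```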

#𝟑. 𝐒𝐚𝐯𝐞 𝐭𝐢𝐦𝐞

Instead of doing tedious tasks, such as endlessly running various scripts, you can focus on tasks that add real value, such as:

- improving & scaling the solution,

- building new solutions,

- helping other people, etc.

By running your whole logic with a single call, your attention won't be split across multiple tasks, and you can focus on what matters.

Along with these 3 benefits, orchestrating your ML pipeline gives you the obvious ones:

- scheduling jobs

- monitoring the entire pipeline in a single place

- backfilling

To conclude…

By using an orchestration tool, you will:

1. Run your ML pipeline more quickly.

2. Manage your versions more easily.

3. Save time by delegating boring tasks.

Which of these 3 is the most helpful for you?

Integrating Airflow with your Python code in a modular and flexible way.

When I initially learned how to use Airflow, the most challenging part was figuring out how to properly install my Python code inside Airflow without having to:

- copy my entire code inside Airflow

- duplicate code

- create weird dependencies

Basically, without creating spaghetti code.

The best solutions are to use either virtual environments or Docker containers.

Let me give you a concrete example of how to quickly do this using Python, Poetry, and venvs.

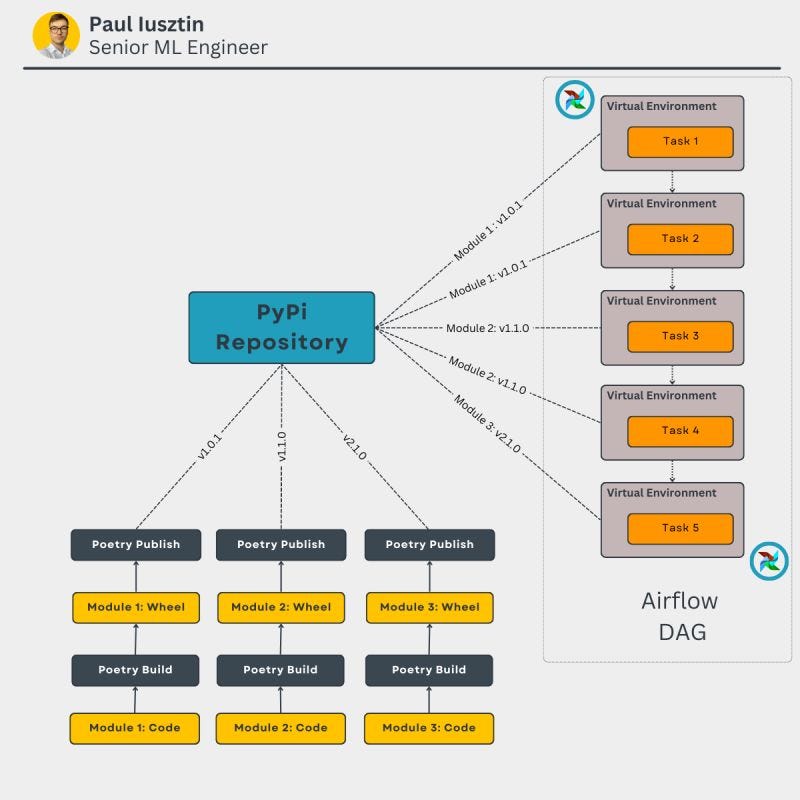

#𝟏. 𝐁𝐮𝐢𝐥𝐝 𝐲𝐨𝐮𝐫 𝐜𝐨𝐝𝐞

Using Poetry, you can quickly build your module into a wheel file using a single command:

```

poetry build

```

#𝟐. 𝐏𝐮𝐛𝐥𝐢𝐬𝐡 𝐲𝐨𝐮𝐫 𝐜𝐨𝐝𝐞 𝐭𝐨 𝐚 𝐏𝐲𝐏𝐢 𝐫𝐞𝐩𝐨𝐬𝐢𝐭𝐨𝐫𝐲

Again, using Poetry, you can quickly deploy your package to a PyPi repository using the following:

```

poetry publish -r <my-pypi-repository>

```

Note that you can host your own PyPi repository or publish your package to the official one.

#𝟑. 𝐃𝐞𝐟𝐢𝐧𝐞 𝐲𝐨𝐮𝐫 𝐭𝐚𝐬𝐤 𝐚𝐬 𝐚 𝐏𝐲𝐭𝐡𝐨𝐧 𝐯𝐞𝐧𝐯

Using Airflow, you can define your task to use a venv instead of the system's Python interpreter.

You can do this using a Python decorator:

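```
from airflow.decorators import task


@task.virtualenv(
    requirements=["<your_python_module_1>", "<your_python_module_2>"],  # etc.
    # ...
)
def run_pipeline_step():  # hypothetical task name
    # Import your package here: the function body runs inside the venv.
    ...
```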

You will specify your newly deployed Python package(s) in the requirements list.

That's it!

Poetry makes building and publishing a Python package quick and simple.

You can also adapt this strategy using Docker. But instead of installing Python packages, you will pull images from a Docker registry and run them as containers.

So...

To deploy your Python code to Airflow, you have to:

- build it using Poetry

- publish it to a PyPi repository using Poetry

- install it inside a venv task

What strategy have you used so far? Leave your thoughts in the comments.

If you want to quickly:

- understand how an orchestration tool such as Airflow is used within an ML system,

- learn how to implement it with hands-on examples (code + learning materials),

check out my Unlocking MLOps using Airflow: A Comprehensive Guide to ML System Orchestration article.

I know that you are busy people.

But, if you have more time to dive into the field, the Airflow article shared above is part of The Full Stack 7-Steps MLOps Framework course that will teach you how to design, build, deploy, and monitor an ML system using MLOps good practices.

The course is posted for free on Medium’s TDI publication and contains the source code + 2.5 hours of reading & video materials.

👉 Check it out → The Full Stack 7-Steps MLOps Framework

See you next week on Thursday at 9:00 am CET.

Have an awesome weekend!

💡 My goal is to help machine learning engineers level up in designing and productionizing ML systems. Follow me on LinkedIn and Medium for more insights!

Thank you ✌🏼 !