Great AI engineers master this... embeddings!

The real fundamentals for becoming an AI engineer. Train from scratch ML models for e-commerce personalized recommenders

We’ve started posting on Substack’s feed, and… we are curious about your opinion!

This week’s topics:

Learning the real fundamentals for becoming an AI engineer

Great AI engineers master this... embeddings!

Train from scratch ML models for e-commerce personalized recommenders

Learning the real fundamentals for becoming an AI engineer

𝗔𝘀𝗽𝗶𝗿𝗶𝗻𝗴 𝗔𝗜 𝗲𝗻𝗴𝗶𝗻𝗲𝗲𝗿? The truth is you need to master 2 basic skills before touching any ML models.

The truth about being a top AI & MLOps engineer is not sexy.

In reality, you need 2 skills that have nothing to do with AI:

1. 𝗣𝗿𝗼𝗴𝗿𝗮𝗺𝗺𝗶𝗻𝗴: Learning Python is a perfect start.

2. 𝗖𝗹𝗼𝘂𝗱 𝗲𝗻𝗴𝗶𝗻𝗲𝗲𝗿𝗶𝗻𝗴: Learning to deploy your apps to a cloud such as AWS is critical in bringing value to your project.

𝘠𝘰𝘶 𝘯𝘦𝘦𝘥 𝘵𝘩𝘦𝘴𝘦 2 𝘣𝘦𝘧𝘰𝘳𝘦 𝘴𝘦𝘳𝘷𝘪𝘯𝘨 𝘔𝘓 𝘮𝘰𝘥𝘦𝘭𝘴 𝘪𝘯 𝘱𝘳𝘰𝘥𝘶𝘤𝘵𝘪𝘰𝘯.

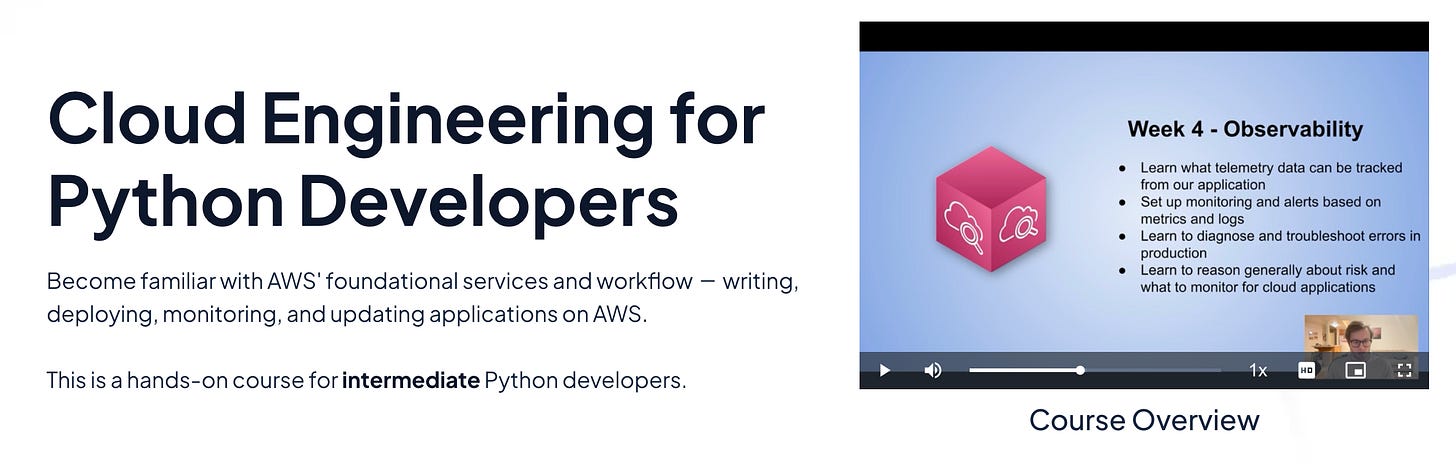

That's where the Cloud Engineering for Python Developers live course, made by Eric Riddoch, comes in.

I have known Eric for almost a year, and I can certify that he is a brilliant cloud, DevOps and MLOps engineer.

𝘏𝘦𝘳𝘦 𝘪𝘴 𝘸𝘩𝘢𝘵 𝘺𝘰𝘶 𝘸𝘪𝘭𝘭 𝘭𝘦𝘢𝘳𝘯 𝘥𝘶𝘳𝘪𝘯𝘨 𝘵𝘩𝘦 𝘤𝘰𝘶𝘳𝘴𝘦:

enterprise-level AWS account management

the fundamentals of cloud engineering

designing cloud-native RESTful APIs that cost-effectively scale from 4 to 4 million requests/day

writing, testing, locally mocking, and deploying code using the AWS SDK and OpenAI

advanced observability and monitoring: logs, metrics, traces, and alerts

The principles learned during the course can easily be extrapolated from AWS to other cloud platforms such as GCP and Azure.

🕰️ The next cohort starts on Jan 6, and it provides:

weekly live classes

lifetime access to self-paced videos, labs and articles

certification of completion

I strongly recommend it for laying down the SWE/DevOps foundations for AI/ML/MLOps engineering.

To get 10% off, use the following code when registering: DECODINGML

Eric also offers a scholarship program that can significantly reduce the price.

If you are considering buying the course, please use the DECODINGML code to support my work.

Great AI engineers master this... embeddings!

Embeddings are the cornerstone of AI, ML, LLMs, you name it. But what are they, and how do they work?

Embeddings are the cornerstone of many AI and ML applications, such as GenAI, RAG, recommender systems, encoding high-dimensional categorical variables (such as input tokens for LLMs) and more.

For example, in a RAG application, they play a pivotal role in indexing and retrieving data from the vector DB, directly impacting the retrieval step.

They are present in almost every ML field in one form or another.

So… What are embeddings?

Imagine you're trying to teach a computer to understand the world.

Embeddings are the translators between the external world (text, images, videos) and the virtual representation that ML models can understand.

This virtual representation is based on vectors (or embeddings).

These vectors aren't random, though.

Similar words (or images or anything else) end up with vectors close to each other in the embedding space. It's like a map where words with similar meanings are clustered together.

For example, the embeddings of 𝘈𝘥𝘶𝘭𝘵 & 𝘊𝘩𝘪𝘭𝘥 and 𝘊𝘢𝘵 & 𝘒𝘪𝘵𝘵𝘦𝘯 form two groups.
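To make "close to each other" concrete, here is a minimal sketch that measures closeness with cosine similarity. The 3-dimensional vectors are hand-made toy values (not the output of a real embedding model), and the `most_similar` helper is a hypothetical name used only for illustration.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Toy vectors, made up for this example.
# Dimensions loosely mean: [is_human, is_feline, is_young]
embeddings = {
    "adult":  [1.0, 0.0, 0.1],
    "child":  [1.0, 0.0, 0.9],
    "cat":    [0.0, 1.0, 0.1],
    "kitten": [0.0, 1.0, 0.9],
}

def most_similar(word):
    """Return the other word whose vector is closest to `word`."""
    others = (w for w in embeddings if w != word)
    return max(
        others,
        key=lambda w: cosine_similarity(embeddings[word], embeddings[w]),
    )

print(most_similar("adult"))
print(most_similar("kitten"))
```

With these toy values, each word's nearest neighbor is the other member of its own group, which is the clustering behavior described above. Real embeddings have far more dimensions, and those dimensions are not individually interpretable like this.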

Also, you can find various powerful relationships by computing the difference between embeddings.

For example, "𝘊𝘩𝘪𝘭𝘥 - 𝘈𝘥𝘶𝘭𝘵" captures the relationship between an adult and its young. Let's label this difference as R.

Now, we can compute "𝘊𝘢𝘵 + 𝘙" to land near the embedding of 𝘒𝘪𝘵𝘵𝘦𝘯.
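Here is a small sketch of this arithmetic, again with hand-made toy vectors rather than real model outputs. Mind the sign: in this example R is computed as child minus adult, so that adding R moves a vector toward "the young one". The helper names are assumptions made for the example.

```python
def add(a, b):
    return [x + y for x, y in zip(a, b)]

def sub(a, b):
    return [x - y for x, y in zip(a, b)]

def squared_distance(a, b):
    return sum((x - y) ** 2 for x, y in zip(a, b))

# Toy vectors, made up for this example.
# Dimensions loosely mean: [is_human, is_feline, is_young]
embeddings = {
    "adult":  [1.0, 0.0, 0.1],
    "child":  [1.0, 0.0, 0.9],
    "cat":    [0.0, 1.0, 0.1],
    "kitten": [0.0, 1.0, 0.9],
}

# R is the "grown-up -> young" direction.
r = sub(embeddings["child"], embeddings["adult"])

# Apply the same direction to "cat" and look up the nearest known word.
predicted = add(embeddings["cat"], r)
nearest = min(
    embeddings,
    key=lambda w: squared_distance(embeddings[w], predicted),
)
print(nearest)
```

In real embedding spaces the result only lands near the target rather than exactly on it, so a nearest-neighbor lookup like the one above is usually needed.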

We can use these relationship vectors for almost anything:

gender

status

body movements

To evaluate the quality of the embeddings, you often visualize them in 2D or 3D space.

As embeddings have more than 2 or 3 dimensions, usually between 64 and 2048, you must project them to 2D or 3D, using algorithms such as UMAP or t-SNE.
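UMAP and t-SNE come from third-party packages, so the sketch below swaps in PCA, a simpler linear projection, to show the same idea using only NumPy (assumed to be installed). The 64-dimensional vectors are synthetic clusters standing in for real embeddings, and `project_2d` is a hypothetical helper name.

```python
import numpy as np

def project_2d(embeddings: np.ndarray) -> np.ndarray:
    """Project (n_samples, n_dims) embeddings to 2-D with PCA via SVD."""
    centered = embeddings - embeddings.mean(axis=0)
    # Rows of vt are the principal directions, sorted by explained variance.
    _, _, vt = np.linalg.svd(centered, full_matrices=False)
    return centered @ vt[:2].T

rng = np.random.default_rng(seed=0)

# Two synthetic clusters of 64-D vectors (not real embeddings).
cluster_a = rng.normal(loc=0.0, scale=0.1, size=(20, 64))
cluster_b = rng.normal(loc=1.0, scale=0.1, size=(20, 64))

points_2d = project_2d(np.vstack([cluster_a, cluster_b]))
print(points_2d.shape)
```

Each row of `points_2d` can then be drawn on a scatter plot. PCA only captures linear structure, which is one reason non-linear methods such as UMAP or t-SNE are often preferred for real embeddings.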

For more insights into embeddings, consider reading our article on embeddings:

Train from scratch ML models for e-commerce personalized recommenders

If you want hands-on experience with a cutting-edge recommender system project, I have good news for you...

We've just released the third lesson of our 𝗛𝗮𝗻𝗱𝘀-𝗼𝗻 𝗛&𝗠 𝗥𝗲𝗮𝗹-𝗧𝗶𝗺𝗲 𝗣𝗲𝗿𝘀𝗼𝗻𝗮𝗹𝗶𝘇𝗲𝗱 𝗥𝗲𝗰𝗼𝗺𝗺𝗲𝗻𝗱𝗲𝗿 𝗰𝗼𝘂𝗿𝘀𝗲, written by Anca Ioana Muscalagiu, who has consistently contributed to Decoding ML.

In this lesson, we explore the training pipeline for building and deploying effective personalized recommenders.

By the end of it, you'll learn how to:

Master the two-tower network architecture used in cutting-edge real-time recommenders.

Leverage Hopsworks feature store for loading the training dataset optimized for high throughput.

Train and evaluate the two-tower network and ranking model.

Upload and manage models in the Hopsworks model registry.

Use MLOps best practices.
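To give a feel for the two-tower idea before diving into the lesson, here is a rough sketch of the scoring step only. It is not the course's implementation: the `Tower` class, the feature sizes, and the random, untrained weights are all assumptions made for illustration, and NumPy is assumed to be installed. One tower embeds customers, the other embeds items, and both land in the same embedding space, so a dot product can score every customer-item pair.

```python
import numpy as np

rng = np.random.default_rng(seed=0)

class Tower:
    """A tiny two-layer network mapping features to a unit-length embedding."""

    def __init__(self, in_dim: int, hidden_dim: int, emb_dim: int):
        # Random, untrained weights (a real model would learn these).
        self.w1 = rng.normal(scale=0.1, size=(in_dim, hidden_dim))
        self.w2 = rng.normal(scale=0.1, size=(hidden_dim, emb_dim))

    def __call__(self, x: np.ndarray) -> np.ndarray:
        hidden = np.maximum(x @ self.w1, 0.0)  # ReLU
        emb = hidden @ self.w2
        norm = np.linalg.norm(emb, axis=-1, keepdims=True)
        return emb / (norm + 1e-12)  # L2-normalize, guarding against zero

# The towers take different inputs but share the output dimension.
query_tower = Tower(in_dim=8, hidden_dim=16, emb_dim=4)
item_tower = Tower(in_dim=12, hidden_dim=16, emb_dim=4)

customers = rng.normal(size=(3, 8))   # 3 customers, 8 made-up features
items = rng.normal(size=(5, 12))      # 5 items, 12 made-up features

# Score matrix: one row per customer, one column per item.
scores = query_tower(customers) @ item_tower(items).T
top_item_per_customer = scores.argmax(axis=1)
print(scores.shape, top_item_per_customer)
```

Because the item embeddings do not depend on the customer, they can be computed ahead of time and looked up at request time, which is what makes this design attractive for real-time recommenders.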

🔗 Get started with Lesson 3:

Thank you, Anca Ioana Muscalagiu, for writing this fantastic lesson!

Images

If not otherwise stated, all images are created by the author.
